Key scientific focus

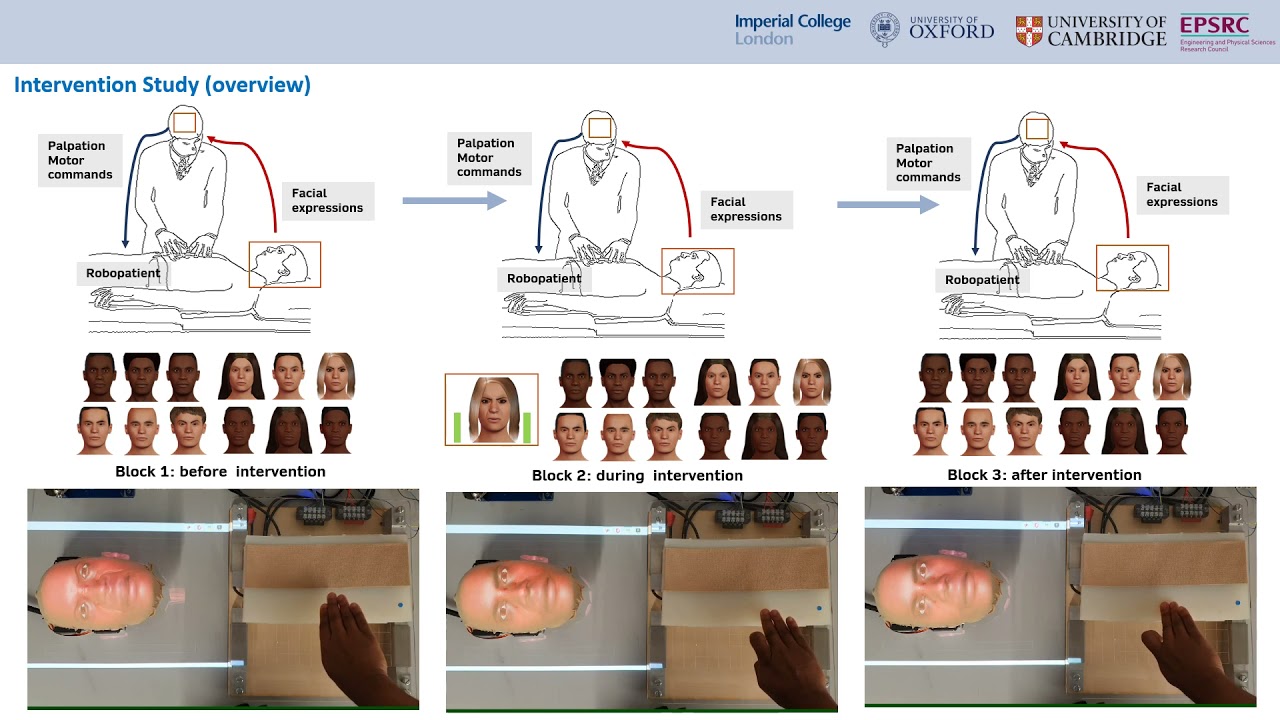

This UK EPSRC-funded project will investigate how human participants develop new haptic exploration behaviours to detect abnormalities in soft tissue under palpation force constraints. We focus on training medical students to perform physical examinations on patients under the constraints imposed by pain expressions, which vary with gender and cultural background.

RoboPatient project

- Team of investigators

- Background

- Transient parameters of facial action units

- Related publications

- Media and workshops

- Dr. Thrishantha Nanayakkara (principal investigator), Dyson School of Design Engineering, Imperial College London

- Dr. Nejra Van Zalk (Co-investigator), Dyson School of Design Engineering, Imperial College London

- Dr. Mazdak Ghajari (Co-investigator), Dyson School of Design Engineering, Imperial College London

- Dr. Fumiya Iida (Co-investigator), Department of Engineering, University of Cambridge

- Professor Simon de Lusignan (Co-investigator), Nuffield Department of Primary Care Health Sciences, University of Oxford

A General Practitioner's (GP's) initial examination of a patient often involves a physical examination to estimate the condition of the internal organs in the abdomen. The patient's facial expressions during palpation are often used as feedback to test a range of diagnostic hypotheses. This leaves room for misinterpretation when the GP finds it difficult to form a stable understanding of the patient's background. This is a critical medical interaction challenge in the UK, where both GPs and patients come from diverse gender and cultural backgrounds.

When estimating the most likely value of a variable, such as the position of a hard formation in soft tissue, humans (including examining doctors) use various internal and external control actions: varying finger stiffness, changing the shape and orientation of the fingers, varying the indentation, and controlling finger position and velocity. We call this behavioural lensing of haptic information. A deeper understanding of how behavioural lensing happens under constraints is particularly important for improving how physicians are trained to develop robust methods of physically examining patients. When a patient is examined, behavioural constraints are imposed by pain expressions, which can be interpreted in different ways depending on the cultural and gender context of the interaction between physician and patient.

The robustness of medical examinations can be improved by introducing new technology-assisted tools into medical education. Clinical sessions in medical training involve demonstrations by an experienced GP trainer on real patients. However, it is difficult to provide a consistent range of examples across different student groups, because each lesson depends on the patients available on the ward. In addition, patients resent being examined repeatedly by students.

From left to right: Thilina Dulantha Lalitharatne, Sybille Rerolle, Yongshuan (Jacob) Tan, Thrishantha Nanayakkara

During a physical examination, physicians gently palpate areas of the body suspected to be affected by illness and use the patient's facial expressions of pain to locate and assess the severity of the condition. In the RoboPatient project, we are developing a robotic face that can produce such pain expressions during palpation. Facial expressions are composed of facial muscle movement primitives known as facial action units (AUs), such as eyebrow narrowing, eyelid tightening and nose wrinkling. However, the rate and delay with which these AUs respond when a palpation force is applied to a painful area are not known. The team conducts experiments with human participants to identify the most agreeable set of transient parameters for the six AUs known to be most relevant to facial expressions of pain.
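To make the idea of AU transient parameters concrete, the sketch below models the intensity of a single AU as a pure time delay followed by a first-order lag, driven by the palpation force above a pain threshold. This is a hypothetical illustration, not the project's model: the function `au_response`, its parameters (`delay`, `tau`, `gain`, `threshold`, `max_intensity`), the force units (newtons) and the 0 to 5 intensity scale are all assumptions chosen for readability. Here `delay` plays the role of the response delay and `tau` the response rate (a smaller time constant gives a faster rise).

```python
from typing import List


def au_response(force: List[float], dt: float, delay: float, tau: float,
                gain: float, threshold: float = 0.0,
                max_intensity: float = 5.0) -> List[float]:
    """Simulate one facial action unit's intensity for a sampled force trace.

    Hypothetical model: a pure time delay followed by a first-order lag.
      force     -- palpation force samples (assumed newtons), dt seconds apart
      delay     -- seconds before the AU starts to react (response delay)
      tau       -- time constant of the rise/decay in seconds (response rate)
      gain      -- intensity per newton of force above the threshold
      threshold -- force below which no pain response is produced
    """
    delay_steps = int(round(delay / dt))
    # Backward-Euler discretisation of tau * dI/dt = target - I
    alpha = dt / (tau + dt)
    intensity = 0.0
    trace = []
    for k in range(len(force)):
        # Force "seen" by the AU after the pure delay (zero before any input arrives)
        delayed = force[k - delay_steps] if k >= delay_steps else 0.0
        # Steady-state intensity this force would produce, clipped to the scale
        target = min(max_intensity, gain * max(0.0, delayed - threshold))
        # Move a fraction alpha of the way towards the target each sample
        intensity += alpha * (target - intensity)
        trace.append(intensity)
    return trace


# Usage: 1 s with no contact, then a 10 N press held for 4 s, sampled at 100 Hz
dt = 0.01
force = [0.0] * 100 + [10.0] * 400
trace = au_response(force, dt, delay=0.2, tau=0.3, gain=0.5, threshold=2.0)
print(f"intensity at 1.19 s: {trace[119]:.3f}")
print(f"final intensity:     {trace[-1]:.3f}")
```

With these illustrative values the AU stays neutral until 0.2 s after the force step, then rises towards an intensity of 4 with a 0.3 s time constant. Sweeping `delay` and `tau` is one possible way to generate candidate expressions for participants to rate.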

Elyse Marshall demonstrating her haptic mouse to examine a virtual patient. Florence Leong developed a surrogate model based on finite element method (FEM) simulations that projects tissue stress information as an image onto the virtual patient.

https://www.youtube.com/watch?v=X8LmQJV6YCg

Please see the section on Haptic Exploration and Multipurpose Soft Sensors.

- The Engineer article titled “RoboPatient combines AR and robotics to train medics”

- Workshop on Human-Robot Medical Interaction at the 2020 IEEE International Conference on Human-Robot Interaction.

- RoPat20 workshop at IROS 2020.


Contact the PI

Professor Thrishantha Nanayakkara

RCS1 M229, Dyson Building

25 Exhibition Road

South Kensington, SW7 2DB

Email: t.nanayakkara@imperial.ac.uk