Research overview

Robots have transformed many industries, most notably manufacturing, and have the power to deliver tremendous benefits to society, for example in search and rescue, disaster response, health care, and transportation. They are also invaluable tools for the scientific exploration of distant planets and deep oceans. However, several major obstacles prevent their widespread adoption in more complex environments outside of factories. First, complex robots are fragile machines that often become damaged when operating in uncontrolled environments. While animals can quickly adapt to injuries, current robots cannot “think outside the box” to find a compensatory behaviour when they are damaged: they are limited to their pre-specified self-sensing abilities, which can diagnose only anticipated failure modes and considerably increase the overall complexity of the robot. Second, robots are limited by the assumptions of their designers. If a walking robot has to cross terrain with an unanticipated surface, such as snow, sand, grass, or ice, or if a robotic manipulator has to grasp objects of unexpected shape or firmness, the robot will likely be unable to accomplish the task.

The objective of our research is to address these limitations with learning algorithms. With this approach, robots can autonomously learn how to face unexpected situations, such as mechanical damage or an unknown environment. Our research aims to improve the algorithmic foundations of learning algorithms in order to increase the versatility, resilience and autonomy of physical robots.

Research themes

Learning to face unforeseen situations

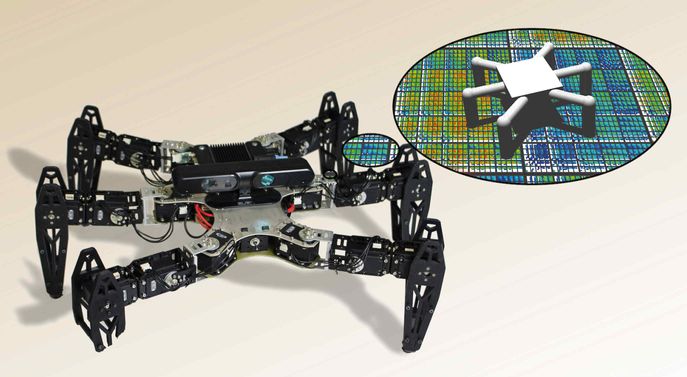

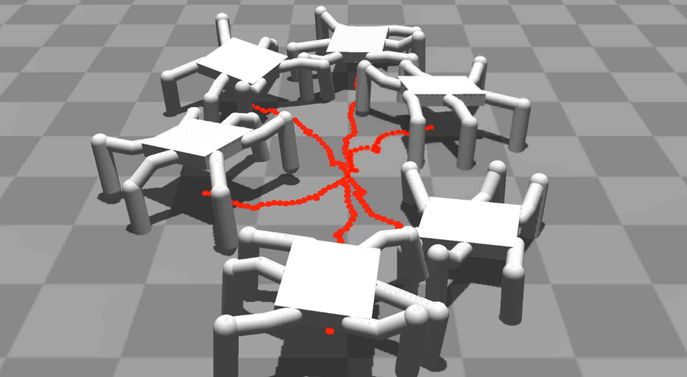

As robots and their environments become more complex, it becomes increasingly difficult for engineers to anticipate every situation that a robot will have to face. In our lab, we develop learning algorithms that let robots autonomously learn how to overcome mechanical damage or how to behave in a new environment. For instance, with our Intelligent Trial and Error algorithm, our robots can learn to walk with a broken leg in less than 2 minutes.

The Intelligent Trial and Error algorithm is described in our Nature paper: Robots that adapt like animals.
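To make the adaptation step more concrete, here is a minimal Python sketch of the idea behind Intelligent Trial and Error, under simplifying assumptions: a behaviour-performance map computed beforehand in simulation serves as the prior mean of a Gaussian process, the robot tries the behaviour with the highest upper confidence bound, and it stops once a tested behaviour performs close to the best prediction. The toy one-dimensional repertoire, the damage model, the kernel, and all parameter values (`alpha`, `kappa`, `length_scale`, `budget`) are hypothetical illustrations, not the settings or code used in the paper.

```python
import numpy as np

def sq_exp_kernel(a, b, length_scale):
    # Squared-exponential kernel between the rows of a (n, d) and b (m, d).
    d2 = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return np.exp(-0.5 * d2 / length_scale ** 2)

def gp_residual_posterior(x_obs, residuals, x_all, length_scale=0.2, noise=1e-3):
    # Zero-mean GP on (real - simulated) performance. Adding the simulated
    # performance back gives a GP whose prior mean is the simulation map.
    if len(x_obs) == 0:
        return np.zeros(len(x_all)), np.ones(len(x_all))
    k_oo = sq_exp_kernel(x_obs, x_obs, length_scale) + noise * np.eye(len(x_obs))
    k_ao = sq_exp_kernel(x_all, x_obs, length_scale)
    mean = k_ao @ np.linalg.solve(k_oo, residuals)
    var = 1.0 - np.sum(k_ao * np.linalg.solve(k_oo, k_ao.T).T, axis=1)
    return mean, np.maximum(var, 1e-12)

def adapt(descriptors, sim_perf, real_trial, alpha=0.9, kappa=0.05, budget=20):
    tested, observed = [], []
    for _ in range(budget):
        res_mean, var = gp_residual_posterior(
            descriptors[tested], np.array(observed) - sim_perf[tested], descriptors)
        mu = sim_perf + res_mean  # predicted real performance of every behaviour
        # Stop once a tested behaviour is close enough to the best prediction.
        if observed and max(observed) >= alpha * mu.max():
            break
        nxt = int(np.argmax(mu + kappa * np.sqrt(var)))  # UCB acquisition
        tested.append(nxt)
        observed.append(real_trial(nxt))  # one trial on the (damaged) robot
    return tested[int(np.argmax(observed))], len(observed)

# Hypothetical toy repertoire: 21 behaviours with a 1-D behaviour descriptor.
descriptors = np.linspace(0.0, 1.0, 21)[:, None]
sim_perf = 1.0 - 0.5 * np.abs(descriptors[:, 0] - 1.0)  # map built in simulation

# Hypothetical damage: behaviours with descriptor > 0.62 lose 80% of performance.
def real_trial(i):
    return sim_perf[i] * (0.2 if descriptors[i, 0] > 0.62 else 1.0)

best, n_trials = adapt(descriptors, sim_perf, real_trial)
print(descriptors[best, 0], n_trials)
```

In this toy run, a single failed trial on a damaged behaviour is enough for the Gaussian process to lower the predictions of its neighbours in the map, so the next trial already moves to the undamaged part of the repertoire.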

Fast learning on physical robots

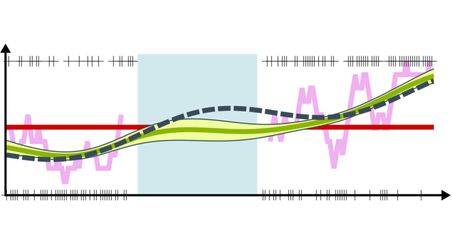

Acquiring data with physical robots is challenging because each evaluation, or trial, takes a significant amount of time. This makes most machine learning approaches, such as deep reinforcement learning, particularly difficult to apply on physical robots. Instead of learning in the Big-Data domain, we design algorithms that work with physical robots in the Micro-Data domain. To do so, we use, for instance, approaches that leverage simulations and cross the reality gap (sim-to-real transfer).

The Intelligent Trial and Error algorithm is one example of this approach.

Learning to become versatile

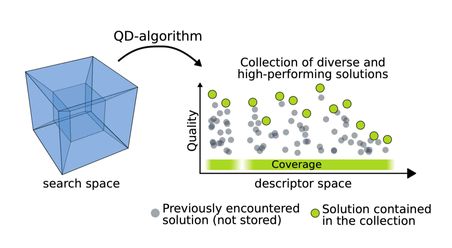

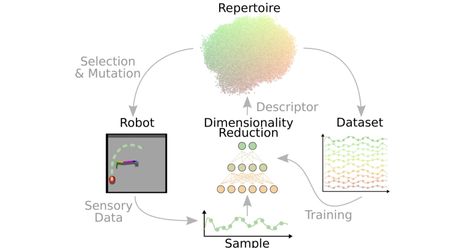

Robots need to be able to accomplish a wide variety of tasks, such as walking, grasping objects, climbing stairs, or opening doors. It is therefore crucial to have learning algorithms that enable robots to acquire this diversity of skills. Our research uses Quality-Diversity algorithms and their strong exploration capabilities to automatically generate behavioural repertoires and to improve the versatility of robots.

To learn more about Quality-Diversity algorithms and behavioural repertoires, you can refer to our overview paper.
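A minimal sketch of MAP-Elites, a widely used Quality-Diversity algorithm, shows how a behavioural repertoire can be filled: the behaviour-descriptor space is discretised into a grid, each cell keeps only the best solution (the elite) found for it, and new candidates are produced by mutating randomly chosen elites. The toy fitness function, the descriptor (the first two genes), the grid size, and the mutation strength below are hypothetical choices for illustration only.

```python
import numpy as np

def map_elites(fitness_fn, descriptor_fn, dim, grid=(10, 10),
               n_init=100, n_iter=5000, sigma=0.1, seed=0):
    rng = np.random.default_rng(seed)
    archive = {}  # grid cell -> (fitness, genome, descriptor)

    def try_insert(genome):
        f, desc = fitness_fn(genome), descriptor_fn(genome)
        # Map a descriptor in [0, 1]^k to its grid cell.
        cell = tuple(min(int(d * n), n - 1) for d, n in zip(desc, grid))
        # Keep the candidate if the cell is empty or it beats the current elite.
        if cell not in archive or f > archive[cell][0]:
            archive[cell] = (f, genome, desc)

    for _ in range(n_init):  # random initial population
        try_insert(rng.uniform(-1.0, 1.0, dim))
    for _ in range(n_iter):  # select a random elite, mutate it, try to insert
        keys = list(archive.keys())
        parent = archive[keys[rng.integers(len(keys))]][1]
        child = np.clip(parent + rng.normal(0.0, sigma, dim), -1.0, 1.0)
        try_insert(child)
    return archive

# Hypothetical toy task: 5-D genome, fitness = -||g||^2, descriptor = first two genes.
archive = map_elites(
    fitness_fn=lambda g: -float(np.sum(g ** 2)),
    descriptor_fn=lambda g: (g[:2] + 1.0) / 2.0,  # rescale [-1, 1] to [0, 1]
    dim=5,
)
print(f"{len(archive)} of 100 cells filled")
```

The resulting archive is the repertoire: one high-performing solution per region of behaviour space, rather than a single optimum.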

Learning without the need for roboticists and engineers

When a robot is deployed on a search and rescue mission, or in the home of an elderly person, we cannot expect a roboticist or a technical expert to be available to fine-tune and parametrise the learning algorithms for the robot. For this reason, our research focuses on developing learning algorithms that can be applied to different robots and different tasks without the need for technical experts.

The AURORA algorithm is an example of an algorithm that can enable a robot to autonomously discover the range of its abilities.
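The key idea behind AURORA is to avoid hand-designed behaviour descriptors by learning them from the robot's own sensory data. The following Python sketch illustrates that loop under strong simplifications: principal component analysis stands in for the learned auto-encoder, a new solution is kept only if its learned descriptor lies farther than a fixed distance from every solution already in an unstructured archive, and the encoder is periodically refitted on the whole archive. The `simulate` function, the fixed `threshold`, and every other parameter are hypothetical and are not taken from the AURORA implementation.

```python
import numpy as np

def simulate(genome, steps=10):
    # Hypothetical robot: genome -> raw sensory trace (x, y positions over time).
    t = np.linspace(0.0, 1.0, steps)
    x = genome[0] * t + genome[1] * t ** 2
    y = genome[2] * t + genome[3] * t ** 2
    return np.concatenate([x, y])

class PCAEncoder:
    # Stand-in for an auto-encoder: a linear projection learned with PCA.
    def fit(self, obs, n_components=2):
        self.mean = obs.mean(axis=0)
        _, _, vt = np.linalg.svd(obs - self.mean, full_matrices=False)
        self.components = vt[:n_components]
        return self

    def encode(self, obs):
        return (obs - self.mean) @ self.components.T

def aurora(n_iter=2000, refit_every=200, threshold=0.1, sigma=0.1, seed=0):
    rng = np.random.default_rng(seed)
    genomes = [rng.uniform(-1.0, 1.0, 4) for _ in range(20)]
    obs = np.array([simulate(g) for g in genomes])
    encoder = PCAEncoder().fit(obs)
    for it in range(1, n_iter + 1):
        parent = genomes[rng.integers(len(genomes))]
        child = np.clip(parent + rng.normal(0.0, sigma, 4), -1.0, 1.0)
        child_obs = simulate(child)
        # Distance from the child to every archived solution in the learned space.
        dist = np.linalg.norm(encoder.encode(obs) - encoder.encode(child_obs), axis=1)
        if dist.min() > threshold:  # novel behaviour: add it to the archive
            genomes.append(child)
            obs = np.vstack([obs, child_obs])
        if it % refit_every == 0:
            encoder.fit(obs)  # re-learn the descriptor space from the archive
    return genomes, encoder.encode(obs)

genomes, latents = aurora()
print(len(genomes), latents.shape)
```

Refitting the encoder changes the descriptor space itself, which is why the sketch re-encodes the whole archive before every novelty test; keeping the novelty threshold fixed is a simplification.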

Algorithms and methods

Quality-diversity optimisation algorithms

Our laboratory is one of the pioneers of this new family of optimisation algorithms. The goal of Quality-Diversity optimisation is to automatically generate a large collection of diverse and high-performing solutions in a single learning process.
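Two summary numbers commonly used in the Quality-Diversity literature make this dual objective concrete: coverage, the fraction of descriptor cells that contain a solution, and the QD-score, the sum of the elite fitnesses after an offset that makes every contribution positive, so that filling a new cell or improving an elite can only increase it. The sketch below computes both for a grid archive; the archive contents and the offset are hypothetical values, and the metrics are shown as a common convention rather than as a description of how any particular study reports results.

```python
import numpy as np

def qd_metrics(elite_fitness, n_cells, fitness_offset):
    """elite_fitness maps each filled cell to the fitness of its elite.

    fitness_offset should be a lower bound on fitness, so that every
    filled cell adds a positive amount to the QD-score.
    """
    fits = np.array(list(elite_fitness.values()), dtype=float)
    coverage = len(fits) / n_cells  # diversity: how much of the space is filled
    qd_score = float(np.sum(fits - fitness_offset))  # diversity and quality together
    return coverage, qd_score

# Hypothetical archive on a 10 x 10 grid with fitness values in [-2, 0].
elites = {(0, 0): -0.5, (0, 1): -0.2, (3, 4): -1.0}
coverage, qd_score = qd_metrics(elites, n_cells=100, fitness_offset=-2.0)
print(coverage, round(qd_score, 6))  # 0.03 4.3
```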

Deep learning

Deep learning is one of our main tools, especially for unsupervised dimensionality reduction and for processing high-dimensional sensory data. In particular, we use variational auto-encoders and recurrent (LSTM and GRU) neural networks.
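As an illustration of how a variational auto-encoder compresses high-dimensional sensory data into a low-dimensional latent code, the following NumPy sketch implements the forward pass and the training objective: reconstruction error plus the closed-form Kullback-Leibler term, with latent samples drawn through the reparameterisation trick. It is an untrained, hypothetical toy; the layer sizes, random weights, and 64-dimensional input are assumptions, and a real model would be trained with an automatic-differentiation framework.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_linear(n_in, n_out):
    # Random weights and zero biases for one fully connected layer.
    return rng.normal(0.0, 1.0 / np.sqrt(n_in), (n_in, n_out)), np.zeros(n_out)

class TinyVAE:
    def __init__(self, obs_dim, latent_dim, hidden=32):
        self.enc = init_linear(obs_dim, hidden)
        self.mu_head = init_linear(hidden, latent_dim)
        self.logvar_head = init_linear(hidden, latent_dim)
        self.dec_hidden = init_linear(latent_dim, hidden)
        self.dec_out = init_linear(hidden, obs_dim)

    @staticmethod
    def _apply(layer, x):
        w, b = layer
        return x @ w + b

    def encode(self, x):
        # Encoder outputs the mean and log-variance of q(z | x).
        h = np.tanh(self._apply(self.enc, x))
        return self._apply(self.mu_head, h), self._apply(self.logvar_head, h)

    def decode(self, z):
        return self._apply(self.dec_out, np.tanh(self._apply(self.dec_hidden, z)))

    def loss(self, x):
        mu, logvar = self.encode(x)
        eps = rng.standard_normal(mu.shape)
        z = mu + np.exp(0.5 * logvar) * eps  # reparameterisation trick
        recon_loss = np.sum((self.decode(z) - x) ** 2, axis=1)
        # Closed-form KL(N(mu, sigma^2) || N(0, I)) for each sample.
        kl = -0.5 * np.sum(1.0 + logvar - mu ** 2 - np.exp(logvar), axis=1)
        return float(np.mean(recon_loss + kl)), z, kl

# Hypothetical batch: 8 sensory vectors of 64 values each, compressed to 2-D.
x = rng.normal(size=(8, 64))
vae = TinyVAE(obs_dim=64, latent_dim=2)
loss, z, kl = vae.loss(x)
print(z.shape)  # (8, 2)
```

After training, the mean `mu` returned by `encode` can serve as the low-dimensional representation of each observation.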

Stochastic machine learning

Stochastic machine learning methods, such as Gaussian processes and Bayesian optimisation, are important tools for data-efficient regression and optimisation. Our robots use them, for instance, to find the most appropriate behaviour within a few minutes.
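The following minimal Python sketch shows how the two methods fit together: a Gaussian process models an expensive objective from a handful of evaluations, and an acquisition function (here expected improvement) chooses the next point to try. The kernel, its fixed length scale, the zero prior mean, the evaluation budget, and the toy objective are all hypothetical simplifications; in practice observations are usually normalised and kernel hyperparameters are fitted to the data.

```python
import math
import numpy as np

def kernel(a, b, length_scale=0.2):
    # Squared-exponential kernel on 1-D inputs.
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / length_scale ** 2)

def gp_predict(x_obs, y_obs, x_query, noise=1e-6):
    # Zero-mean GP posterior mean and standard deviation at x_query.
    k_oo = kernel(x_obs, x_obs) + noise * np.eye(len(x_obs))
    k_qo = kernel(x_query, x_obs)
    mean = k_qo @ np.linalg.solve(k_oo, y_obs)
    var = 1.0 - np.sum(k_qo * np.linalg.solve(k_oo, k_qo.T).T, axis=1)
    return mean, np.sqrt(np.maximum(var, 1e-12))

_erf = np.vectorize(math.erf)

def expected_improvement(mean, std, best, xi=0.01):
    # EI for maximisation: expected amount by which a point beats `best`.
    z = (mean - best - xi) / std
    cdf = 0.5 * (1.0 + _erf(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    return (mean - best - xi) * cdf + std * pdf

def bayes_opt(objective, candidates, n_init=2, n_iter=8, seed=0):
    rng = np.random.default_rng(seed)
    x_obs = rng.choice(candidates, size=n_init, replace=False)
    y_obs = np.array([objective(x) for x in x_obs])
    for _ in range(n_iter):  # each iteration costs one (expensive) evaluation
        mean, std = gp_predict(x_obs, y_obs, candidates)
        ei = expected_improvement(mean, std, y_obs.max())
        x_next = candidates[int(np.argmax(ei))]
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, objective(x_next))
    i = int(np.argmax(y_obs))
    return x_obs[i], y_obs[i]

# Hypothetical objective standing in for a costly robot trial; its optimum is x = 0.3.
candidates = np.linspace(0.0, 1.0, 101)
x_best, y_best = bayes_opt(lambda x: -(x - 0.3) ** 2, candidates)
print(x_best, y_best)
```

Only ten evaluations are spent in total, which is the kind of budget that matters when every evaluation is a trial on a physical robot.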