Learning to Solve Differential Equations: ML-Driven Splitting and Parallel-in-Time Methods

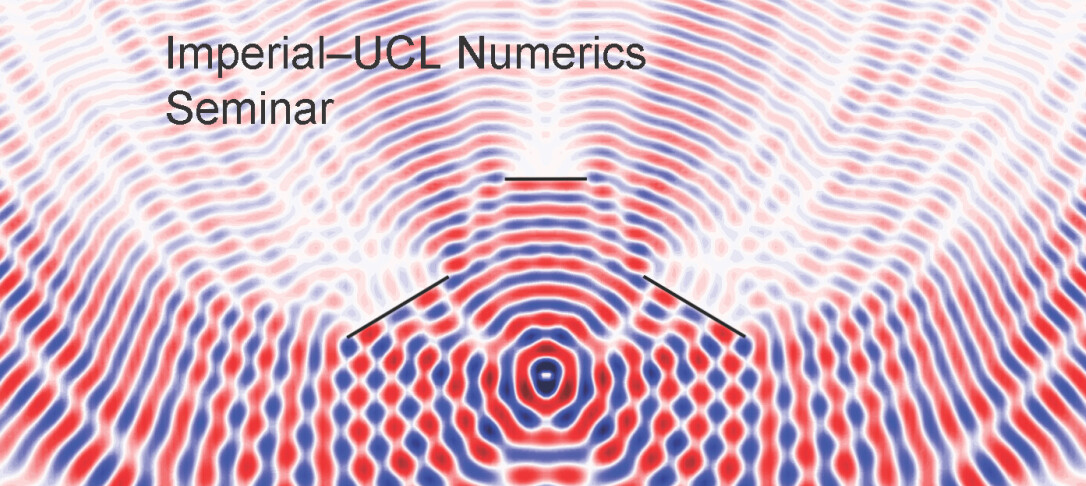

Machine learning offers new avenues for improving the numerical solution of differential equations, particularly in resource-constrained settings where traditional methods struggle. In this talk, I will discuss two recent approaches that integrate ML into numerical time-stepping schemes. The first focuses on learning splitting methods that are computationally efficient for large timesteps while maintaining provable convergence and conservation properties in the small-timestep limit. When applied to the Schrödinger equation, these learned methods can significantly outperform established approaches under a fixed computational budget. The second approach enhances the parallel-in-time Parareal algorithm by using a neural network as the coarse propagator. Through theoretical analysis and numerical experiments, including applications to the Lorenz, Burgers’, and SIR equations, we demonstrate that using Random Projection Neural Networks leads to efficient training while preserving accuracy. Together, these works highlight the potential of ML for developing structure-preserving, efficient numerical solvers for differential equations.
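For readers unfamiliar with Parareal, the following is a minimal sketch of the standard iteration, U_{n+1}^{k+1} = G(U_n^{k+1}) + F(U_n^k) - G(U_n^k), applied to the Lorenz system. It is an illustration under stated assumptions and not the implementation from the talk: the coarse propagator G here is a single forward Euler step standing in for the learned neural-network propagator, the fine propagator F is classical RK4 with many substeps, and all function names and parameter values are hypothetical.

```python
import numpy as np

def lorenz(t, y, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz system (classical parameter values)."""
    x, yy, z = y
    return np.array([sigma * (yy - x), x * (rho - z) - yy, x * yy - beta * z])

def fine(f, y, t0, t1, substeps=100):
    """Fine propagator F: classical RK4 with many small substeps."""
    dt = (t1 - t0) / substeps
    t = t0
    for _ in range(substeps):
        k1 = f(t, y)
        k2 = f(t + dt / 2, y + dt / 2 * k1)
        k3 = f(t + dt / 2, y + dt / 2 * k2)
        k4 = f(t + dt, y + dt * k3)
        y = y + dt / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        t += dt
    return y

def coarse(f, y, t0, t1):
    """Coarse propagator G: one forward Euler step.
    ASSUMPTION: a placeholder for the learned (neural network) propagator."""
    return y + (t1 - t0) * f(t0, y)

def parareal(f, y0, t_grid, iterations):
    """Run `iterations` Parareal corrections on the time grid `t_grid`."""
    n = len(t_grid) - 1
    U = np.empty((n + 1,) + y0.shape)
    U[0] = y0
    # Initial guess: one sequential sweep with the cheap coarse propagator.
    for i in range(n):
        U[i + 1] = coarse(f, U[i], t_grid[i], t_grid[i + 1])
    for _ in range(iterations):
        # The fine solves are independent across intervals; this is the part
        # that would run in parallel. Here it is a plain loop for clarity.
        F_old = [fine(f, U[i], t_grid[i], t_grid[i + 1]) for i in range(n)]
        G_old = [coarse(f, U[i], t_grid[i], t_grid[i + 1]) for i in range(n)]
        U_new = np.empty_like(U)
        U_new[0] = y0
        # Sequential correction sweep using only the coarse propagator.
        for i in range(n):
            G_new = coarse(f, U_new[i], t_grid[i], t_grid[i + 1])
            U_new[i + 1] = G_new + F_old[i] - G_old[i]
        U = U_new
    return U

def serial_fine(f, y0, t_grid):
    """Reference: apply the fine propagator sequentially over all intervals."""
    U = [y0]
    for i in range(len(t_grid) - 1):
        U.append(fine(f, U[-1], t_grid[i], t_grid[i + 1]))
    return np.array(U)

y0 = np.array([1.0, 1.0, 1.0])
t_grid = np.linspace(0.0, 0.5, 11)  # 10 coarse intervals
U_parareal = parareal(lorenz, y0, t_grid, iterations=3)
U_reference = serial_fine(lorenz, y0, t_grid)
print("max error after 3 iterations:", np.abs(U_parareal - U_reference).max())
```

With as many iterations as time intervals, the iteration reproduces the sequential fine solution up to rounding, so any speedup depends on converging in far fewer iterations. That is why the accuracy and cost of the coarse propagator matter.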