
Context

Deep learning methods require large amounts of data to train models that generalise well across differing real-world environments. To minimise human effort, self-supervised methods can be adopted to automate the collection and annotation of large-scale datasets. Employing such a self-supervised methodology, we create what is, to the best of our knowledge, the first indoor flight dataset annotated with real distance labels to the closest obstacle in three diverging directions within the field of view of the drone's forward-looking camera.

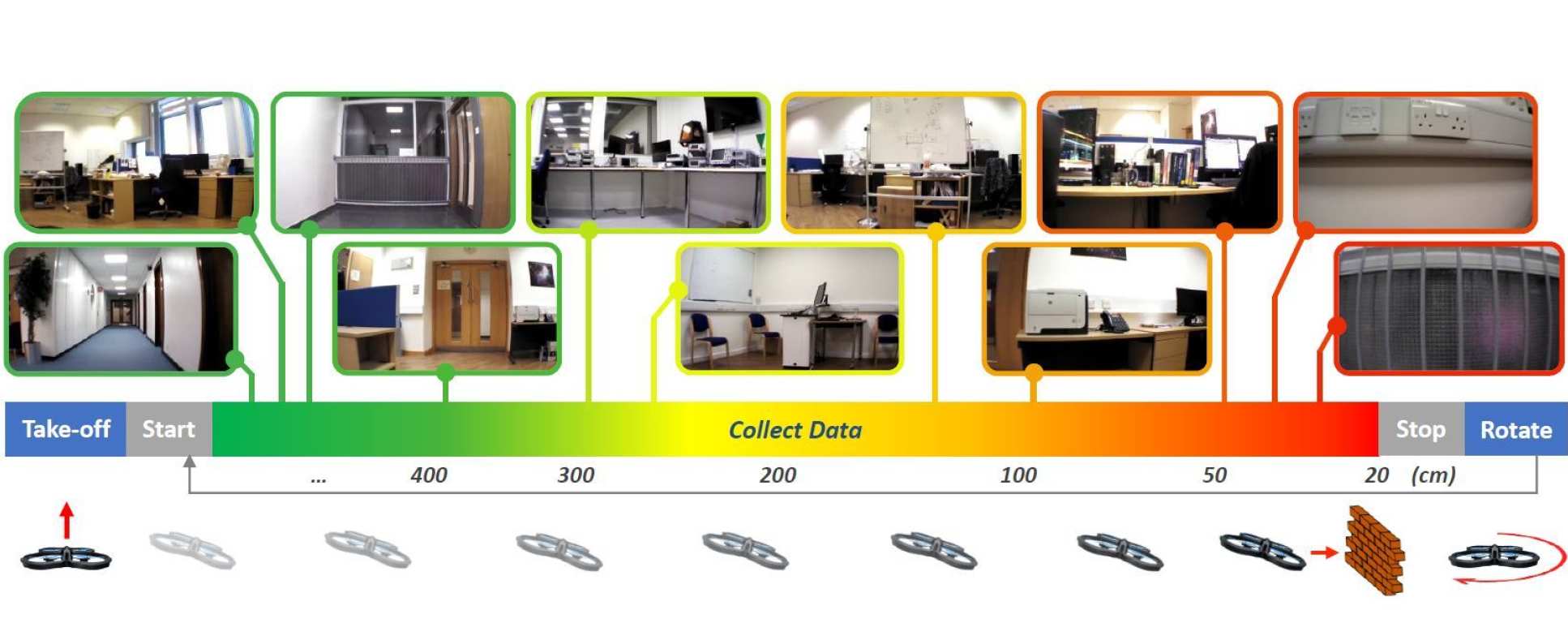

Three pairs of ultrasonic and infra-red (IR) distance sensors are mounted on a UAV, pointing in different directions within its camera's field of view, to allow automatic annotation of all data samples. Since the drone is equipped with a wide-angle 92° camera, the sensors are aligned at [-30°, 0°, 30°] across the image width, with respect to the centre of the captured frame. The drone executes straight-line trajectories in multiple environments within real buildings, while camera images are recorded along with raw data from the external distance sensors. Each trajectory terminates when the forward-looking sensor detects an obstacle at the minimum distance that the drone requires to stop without colliding. A random rotation is then performed to determine the flight direction of the next trajectory, which should point towards a navigable area of the environment. This constraint is evaluated using the UAV's external distance sensors, which minimises the human supervision required during data collection and hence makes the dataset easily extensible.
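The collection procedure described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the threshold `STOP_DISTANCE_M`, the callback `read_distances`, the retry limit, and the rule that a heading counts as navigable only when all three sensors report more than the stopping distance are assumptions made purely for illustration.

```python
import random

# Hypothetical minimum distance (metres) the drone needs to stop safely.
STOP_DISTANCE_M = 1.0


def trajectory_should_end(forward_distance_m, stop_distance_m=STOP_DISTANCE_M):
    """Return True once the forward-looking sensor sees an obstacle
    at or below the stopping distance."""
    return forward_distance_m <= stop_distance_m


def pick_next_heading(read_distances, stop_distance_m=STOP_DISTANCE_M,
                      rng=random, max_tries=100):
    """Sample random rotations until one points towards free space.

    read_distances(heading_deg) is a hypothetical callback returning the
    (left, centre, right) sensor distances in metres for that heading.
    A heading is treated as navigable when all three readings exceed the
    stopping distance (an assumed criterion, not taken from the source).
    Returns the heading in degrees, or None if no heading was accepted.
    """
    for _ in range(max_tries):
        heading = rng.uniform(0.0, 360.0)
        if min(read_distances(heading)) > stop_distance_m:
            return heading
    return None
```

Because the navigability check relies only on the on-board distance sensors, a loop of this kind needs no human to choose the next flight direction, which is the property the text highlights.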

Dataset

At the moment, the following parts of the dataset, corresponding to specific indoor environments, are publicly available. More environments will be added progressively. You can download the data and annotations using the links provided:

| Indoor Environment | Number of Trajectories / Frames | Download Data and Annotations |
|---|---|---|
| Corridor | 288 trajectories, approx. 54,600 frames | click here |

Files are organised into folders corresponding to the different flight trajectories of the data collection process. For each trajectory, 3 .mat files are included, containing the real distance-to-collision values of each frame in each direction. These values are extracted by fusing the data streams of all distance sensors mounted on the drone and applying several smoothing and denoising filters.
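As a rough illustration of working with this layout, the following Python sketch indexes the annotation files per trajectory. It assumes that each trajectory is a direct subfolder of the extracted dataset root; the function name `index_trajectories` is hypothetical, and the actual folder and file names are not specified here.

```python
from pathlib import Path


def index_trajectories(root):
    """Map each trajectory folder name to the sorted list of its .mat files.

    Assumes one subfolder per trajectory directly under `root`;
    folders without any .mat file are skipped.
    """
    index = {}
    for folder in sorted(Path(root).iterdir()):
        if folder.is_dir():
            mat_files = sorted(folder.glob("*.mat"))
            if mat_files:
                index[folder.name] = mat_files
    return index
```

Each listed file could then be read with a MATLAB-file reader such as `scipy.io.loadmat`, which returns a dictionary keyed by the variable names stored in the file. Those variable names are not documented on this page, so they should be inspected rather than assumed.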

Citation

Please cite the following paper if you find this dataset useful for your work:

Kouris, A. and Bouganis, C.S., Learning to Fly by MySelf: A Self-Supervised CNN-based Approach for Autonomous Navigation. In 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2018. (pdf, BibTeX)

License

This dataset is made available by the Intelligent Digital Systems Lab (iDSL) at Imperial College London, under an Open Data Commons (ODC) Attribution License.