Insights summary

These insights relate to a pilot use of FeedbackFruits, a new tool for peer assessment of contribution to groupwork, in the Department of Life Sciences.

- Students found the tool easy to use.

- It had clear advantages for learning technologists in terms of ease and efficiency of set up and data processing.

- It isn’t possible in FeedbackFruits to force students to give different scores for each of their peers.

- Most students gave different comments for each of their peers, even if they didn’t give different scores.

Introduction and aims

In time for the start of the academic year 2022-23, the College introduced FeedbackFruits, a new ICT-supported tool for peer assessment of contribution to groupwork (PACG). It replaced the open source WebPA tool, which no longer met College ICT requirements. FeedbackFruits was tested by the EdTech Labs and ICT and selected from a range of similar tools before being made available across College for learning and assessment activities.

In the Faculty of Natural Sciences in recent years, the majority of PACG exercises have utilised bespoke data input and processing solutions built in Excel and Power Query. One of the PACG assessment exercises for which such a solution had been used was due to take place early in the autumn term 2022-23. It was selected as an opportunity to pilot use of FeedbackFruits with students and teaching staff.

The exercise was part of a team-based learning (TBL) activity in the second year Life Sciences module, LIFE50016 – Applied Molecular Biology - (Autumn 2022-23). A full TBL activity includes PACG in relation to the TBL tests the students complete together as a team. Students are required to give a rating score for the contribution made by each member of their team and a constructive comment about their groupwork. In LIFE50016, the peer assessment accounts for 10% of the overall mark for the TBL.

Design of the assessment using the bespoke solution

The peer scoring element of the assessment was designed to encourage students to think more deeply about the scores and comments they give to peers by asking them to differentiate between the contributions made by each member of their group. Most teams comprised 5 students and some 4 or 6. Students were asked to allocate a total of (n-1)*20 points between their team members (where n is the number of students in the team) and, importantly, were required to give a different rating score to each team member.
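The two scoring rules above can be illustrated with a short check. This is a minimal sketch, not the Excel logic used in the bespoke solution: the function names are hypothetical, and it assumes (the report does not say so explicitly) that each student scores the other n-1 members of the team and not themselves.

```python
def point_budget(team_size: int, points_per_peer: int = 20) -> int:
    """Total points one student must allocate: (n - 1) * 20."""
    return (team_size - 1) * points_per_peer


def is_valid_allocation(scores: list[int], team_size: int) -> bool:
    """Check one student's scores against the two rules in the design.

    Assumption: a student scores each of the other n - 1 team members
    and does not score themselves.
    """
    return (
        len(scores) == team_size - 1
        and sum(scores) == point_budget(team_size)  # budget fully used
        and len(set(scores)) == len(scores)         # every score is different
    )


print(point_budget(5))                           # 80
print(is_valid_allocation([26, 22, 18, 14], 5))  # True
print(is_valid_allocation([20, 20, 20, 20], 5))  # False: scores not distinct
```

For a team of 5, the budget is 80 points, so the scores average 20 per peer while the distinctness rule forces the student to rank contributions.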

Students input their rating scores and comments in an Excel spreadsheet that had been manually pre-populated with student team information, which they downloaded from the Blackboard course. Then they uploaded the spreadsheet to a TurnItIn assignment in the Blackboard course.

The spreadsheets were downloaded in bulk and processed either manually or using Power Query to collate the feedback comments and calculate the mean rating score for each student. The mean rating scores were then adjusted to have a similar percentage points distribution to that of the combined score of the other elements of the TBL, to produce the final peer assessment mark.

Feedback comments were shared in a spreadsheet with the academic leading the TBL activity, for review and moderation. The only moderation possible was to delete an inappropriate comment or add a teacher comment in response.

Final peer assessment marks and collated feedback comments were uploaded to Blackboard and released to the students to view.

Spreadsheet preparation, data processing and uploading and releasing of marks and feedback comments were performed by EdTech staff.

Design of the assessment in FeedbackFruits

It was not possible to replicate the scoring design above in FeedbackFruits. Instead, the peer scoring element of the assessment involved the students giving a rating of 1 to 10 for each member of their team. There was no requirement to give a different rating to each team member. The final peer assessment mark included contributions for 1) giving scores and 2) comments to peers, 3) reviewing own scores and comments, and 4) the mean rating score (weightings 15%:15%:10%:60%).
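As an illustration of how the 15%:15%:10%:60% weightings combine, the sketch below computes a final mark from the four components. It is not FeedbackFruits' own calculation. The function and key names are hypothetical, and two things are assumed rather than taken from the report: that the first three components are completion fractions between 0 and 1, and that the mean rating is converted to a fraction by dividing by 10.

```python
# Weightings from the report: giving scores, giving comments,
# reviewing own scores and comments, and the mean rating received.
WEIGHTS = {
    "gave_scores": 0.15,
    "gave_comments": 0.15,
    "reviewed": 0.10,
    "mean_rating": 0.60,
}


def final_peer_mark(gave_scores: float, gave_comments: float,
                    reviewed: float, mean_rating: float) -> float:
    """Return the final peer assessment mark as a percentage.

    Assumptions (not from the report): the first three arguments are
    completion fractions in [0, 1]; mean_rating is on the 1-10 scale
    and is divided by 10 to put it on the same footing.
    """
    components = {
        "gave_scores": gave_scores,
        "gave_comments": gave_comments,
        "reviewed": reviewed,
        "mean_rating": mean_rating / 10,
    }
    return 100 * sum(WEIGHTS[name] * value for name, value in components.items())


# A student who completes every step and receives a mean rating of 10.
print(round(final_peer_mark(1, 1, 1, 10), 1))  # 100.0
# A student who gives no comments and receives a mean rating of 8.
print(round(final_peer_mark(1, 0, 1, 8), 1))   # 73.0
```

Under these assumptions, 40% of the mark depends only on completing the steps, so a student who completes every step and is rated 10 by all peers reaches 100%.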

The FeedbackFruits peer assessment exercise was added to the Blackboard course for the module. There is a learning tools interoperability (LTI) integration between the two platforms, which means that in addition to staff and students being able to access the assessment from the Blackboard course, user and student group information was transferred from Blackboard and marks sent back to Blackboard.

Students accessed the assessment from Blackboard via the LTI integration and input their rating scores and comments into FeedbackFruits.

Feedback comments were shared in a spreadsheet with the academic leading the TBL activity, for review and moderation, as they had been in previous years. This was the analytics Excel file that can be downloaded by any user with a teacher role in FeedbackFruits (which is mapped to the Course Teacher, Course Admin or Instructor role in Blackboard).

The final peer assessment mark and feedback comments were released for the students to view in FeedbackFruits. The final peer assessment mark was returned to Blackboard and incorporated into the overall mark for the TBL and the overall mark was released to the students to view.

Aims of the evaluation

The aims were to assess FeedbackFruits against the objectives listed in Table 1.

Table 1. Objectives, success indicators and related methods of assessment for the evaluation of FeedbackFruits for peer assessment as part of a Team-Based Learning exercise in LIFE50016

| Objective | Action | Success indicators | Metrics/methods of assessment |
| --- | --- | --- | --- |
| 1. To allow students to give PACG scores and constructive feedback comments to peers in their TBL team easily, and to review comments given about them easily. | Use a tool designed for peer assessment exercises (FeedbackFruits). | Few student requests for help with the tool. Students reporting that they found the tool easy to use. | Number of requests for help in using the tool. |
| 2. To reduce manual processing steps required of the EdTech team in setting up and releasing marks and feedback for peer assessment exercises. | Use a tool designed for peer assessment exercises (FeedbackFruits) that uses LTI with Blackboard and compare it with the bespoke solution using Excel and Power Query. | Fewer manual processes required and learning technologists reporting that they found the tool easier to use than the bespoke solution. | Informal feedback from learning technologists. |
| 3. To assess the impact of reduced flexibility in peer assessment scoring design, e.g. inability to use differential scoring to encourage deeper thinking about peer contributions and to discriminate between students. | To compare peer assessment scores for different scoring designs. | Low impact of reduced flexibility on the differential scoring and discrimination between student scores. Low impact on overall TBL mark. | Distributions of scores: histograms, mean and standard deviation; median and inter-quartile range. Count of distinct scores. Distributions of TBL marks: histograms, mean and standard deviation; median and inter-quartile range. |

Methods

Scores and marks for the peer assessment task and the TBL overall were compared for academic years 2021-2022 and 2022-2023, for which the bespoke solution and FeedbackFruits were used, respectively. Scores and marks were downloaded from FeedbackFruits and from Blackboard for statistical analysis. The distributions of scores and marks were compared by plotting histograms and calculating summary statistics. Student's t-test (unpaired) was used to compare TBL overall marks between academic years with a significance level of α = 0.05. Excel was used for the statistics.

A Blackboard survey was used to collect feedback from students on their experience of using FeedbackFruits for the peer assessment task in 2022-2023. It comprised three questions with 4-point Likert scale response options. It was released after the TBL exercise had been completed and peer assessment marks and comments had been released and was closed after one week. Feedback was gathered informally from the academic leading the exercise and from the learning technologists supporting the use of the bespoke solution and FeedbackFruits.

Results and Discussion

The numbers of students participating in the TBL activity and PACG exercise each year are shown in Table 2.

Table 2. Numbers of students who participated in the TBL activity in LIFE50016 and the PACG exercise

| Academic Year | TBL n | PACG n (%) | Gave PACG scores n (%) | Gave PACG comments n (%) |
| --- | --- | --- | --- | --- |
| 2021-2022 | 148 | 147 (99%) | 147 (99%) | 145 (98%) |
| 2022-2023 | 169 | 168 (99%) | 157 (93%) | 137 (81%) |

All students in 2022-2023 were invited to submit feedback via the Blackboard survey. There were 20 respondents (12%).

Evaluation against the objectives

Objective 1. To allow students to give PACG scores and constructive feedback comments to peers in their TBL team easily, and to review comments given about them easily.

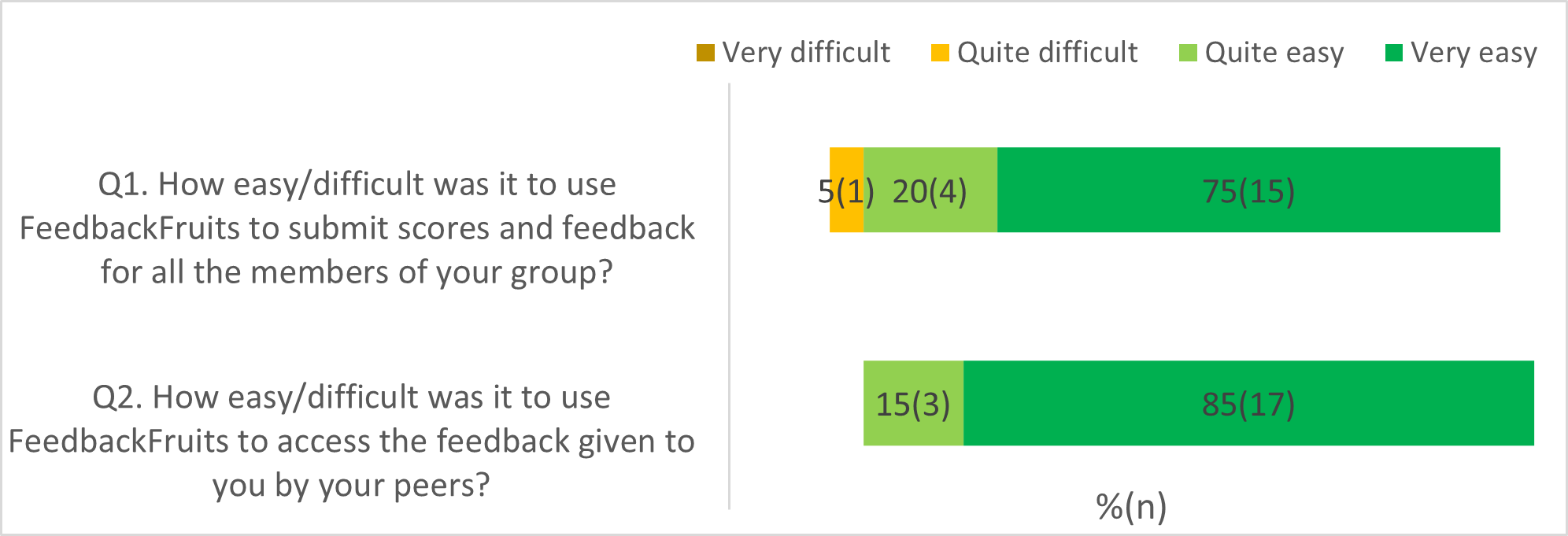

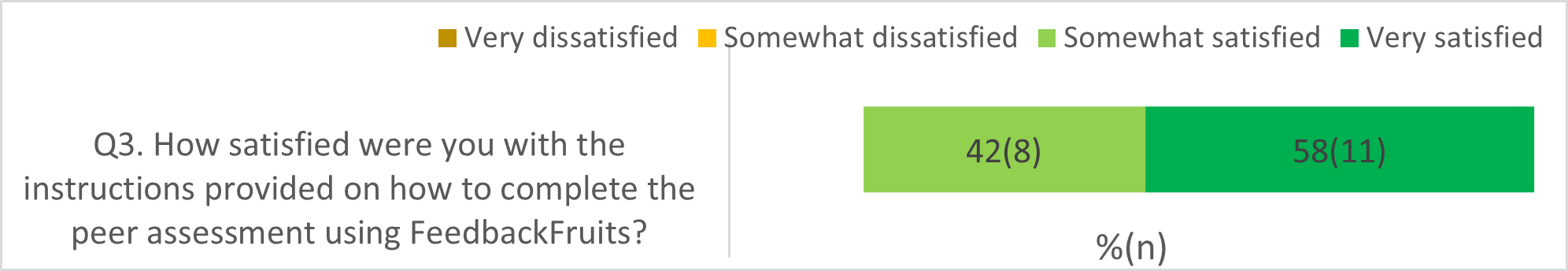

Of the 20 survey respondents:

- The majority (19) reported finding FeedbackFruits easy to use to submit scores and feedback comments about the contribution of their peers to the TBL groupwork. One student found it quite difficult.

- All reported finding it easy to access the feedback comments given to them by their peers.

- All who responded to the question about instructions (19) were satisfied with the information provided on how to complete the peer assessment using FeedbackFruits.

The results described above are shown in Figure 1.

When asked what was good or could be better about FeedbackFruits, 7 students left comments:

- 4 were positive about the tool and its user interface; of those, 2 liked the use of replacement names, which gave them confidence that their feedback would be anonymous.

- 2 commented that it was unclear in the tool whether they had completed each step of the task.

- 1 reported that it didn’t work straight away.

Figure 1. Students’ responses to the survey about FeedbackFruits (1 respondent omitted Q3).

Although the proportion of survey respondents was small (12%), the feedback received was very positive about the tool and its ease of use. There were minor comments about task completion indicators being desirable.

No requests for help with the tool were received from students by the EdTech team, suggesting that the cohort did not have problems using FeedbackFruits.

Objective 2. To reduce manual processing steps required of the EdTech team in setting up and releasing marks and feedback for peer assessment exercises.

The EdTech steps involved in all stages of the PACG are shown in Table 3, for the bespoke solution and for FeedbackFruits. FeedbackFruits required less EdTech staff time than the bespoke solution because it involved fewer and less complex tasks.

Table 3. EdTech steps involved in all stages of the PACG for both solutions

| Steps | Bespoke solution | FeedbackFruits |
| --- | --- | --- |
| Creating data capture solution | Create a template data capture workbook in Excel | N/A |
| Creating data processing solution | Create a template data processing workbook in Excel containing Power Query scripts | N/A |
| Setting up as per assessment design | Check settings in Excel data capture workbook | Select settings in the teacher interface of the tool |
| Adding student group information | Manually populate in the Excel data capture workbook from a list provided by the Education Office | Student group information (set up for the TBL) brought in automatically from Blackboard |
| Making available to students in Blackboard course | Upload Excel data capture workbook to Blackboard course and make available for students to download, complete and upload to a submission dropbox | Add as a content item in the Blackboard course and make available to students |
| Collating PACG input data | Download the data capture workbooks submitted by the students. Add settings to the Excel data processing workbook and run the scripts in Power Query to collate the data from the student workbooks. | Done automatically by the tool |
| Processing PACG data | Run scripts in Power Query to create average peer review scores, final percentage marks and collated comments from peers | Done automatically by the tool |
| Providing comments data to the academic for checking/moderation | Data file shared with the academic | Teachers can access in the tool |
| Releasing final marks and comments to students | Import final marks and comments, with HTML formatting, into the Blackboard grade centre | Publish in the tool. Blackboard grade centre is populated automatically |

Objective 3. To assess the impact of reduced flexibility in peer assessment scoring design

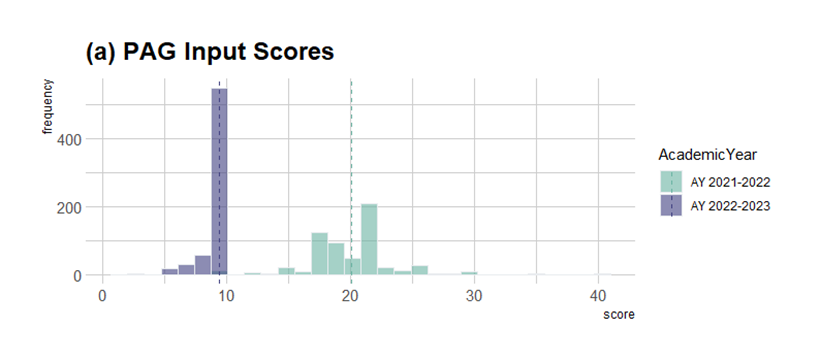

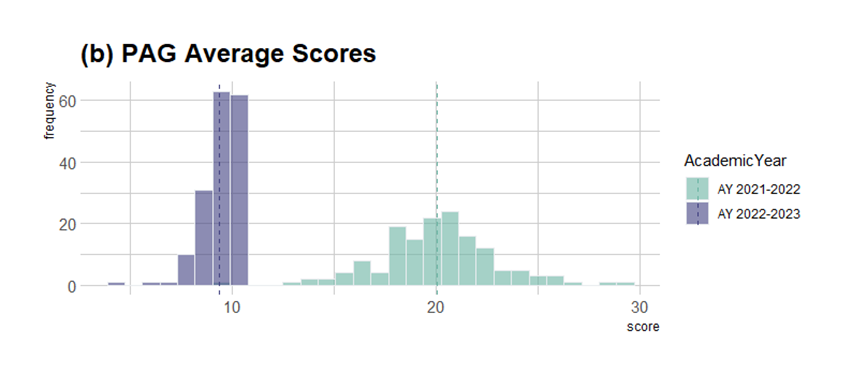

Almost all students participated in at least one aspect of the PACG exercise in both years (see Table 2). The distributions of raw and mean scores given by students to their peers for contributions to the TBL groupwork are shown in Figure 2 for AY 2021-2022 (bespoke solution used) and AY 2022-2023 (FeedbackFruits used). The distribution of raw scores for the bespoke solution was normal by design. The mean scores and final peer assessment marks also had approximately normal distributions. In contrast, the design used in FeedbackFruits resulted in negatively skewed distributions. Maximum scores were frequently given by students to their peers (70% of raw scores) and more than 30% of students received a final peer assessment mark of 100%.

Figure 2 (a), (b), (c). Histograms of (a) raw scores, (b) mean scores given by students to their peers and (c) final peer assessment marks in AY 2021-2022 and AY 2022-2023.

It was not possible to assess whether the students thought as deeply about the scores and comments they gave to peers when they were not required to give differential scores. Of the 157 students who gave scores, 60% (n=94/157) opted not to differentiate scores between members of their group. Of the 158 students who left comments for their peers, 87% (n=137/158) left different comments for each team member, which was similar to the previous year (85%). Only one student did not leave comments for all team members.
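The "count of distinct scores" metric behind the 60% figure can be sketched as follows. The function names and the example ratings are hypothetical and are not data from the pilot. The sketch assumes that "not differentiating" means a student gave the same score to every peer they rated.

```python
def gave_identical_scores(scores: list[int]) -> bool:
    """True if a rater gave every peer the same score."""
    return len(set(scores)) == 1


def percent_not_differentiating(ratings_by_rater: dict[str, list[int]]) -> float:
    """Percentage of raters whose scores contain only one distinct value."""
    flags = [gave_identical_scores(scores) for scores in ratings_by_rater.values()]
    return 100 * sum(flags) / len(flags)


# Hypothetical ratings on the 1-10 scale, one list per rater.
example = {
    "rater_a": [10, 10, 10, 10],
    "rater_b": [10, 9, 8, 10],
    "rater_c": [7, 7, 7, 7],
    "rater_d": [10, 9, 10, 10],
    "rater_e": [9, 9, 9, 9],
}
print(percent_not_differentiating(example))  # 60.0
```

The same calculation applied to the counts reported above, 94 of 157 students, gives the 60% quoted in the text.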

The impact of the scoring design used in FeedbackFruits on the TBL overall marks was investigated by comparing the mark distributions for AY 2021-2022 and AY 2022-2023. Figure 3 shows the distributions of TBL overall marks for the two academic years and Table 4 shows the summary statistics.

Figure 3. Distributions of the TBL overall marks per academic year investigated. Dashed lines indicate the means.

Table 4. Summary statistics for the TBL overall marks expressed as percentages for the academic years investigated

| | AY 2021-2022 % | AY 2022-2023 % |
| --- | --- | --- |
| Mean | 69.7 | 77.0 |
| Standard deviation | 8.6 | 6.9 |
| Min | 44.8 | 53.5 |
| Max | 94.3 | 91.8 |
| Range | 49.5 | 38.3 |

There was a higher mean and slightly narrower spread of TBL overall marks in AY 2022-2023 compared with the previous year, as indicated by a reduction in the range and standard deviation. An unpaired, two-tailed t-test for samples with unequal variance revealed a significant difference between the distributions of TBL overall marks for these years (p<0.001). However, this could not be attributed solely to differences in peer assessment marks because there was also a higher mean and a significant difference between the weighted sum of marks for the other components of the TBL exercise (AY 2021-2022 mean = 62.6, AY 2022-2023 mean = 67.5; p<0.001).
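The unequal-variance (Welch) t-test can be approximately reproduced from the summary statistics in Table 4. This is a sketch only: the original analysis was done in Excel on the full mark lists. The sample sizes 148 and 169 are taken from the TBL column of Table 2 and are assumed to match the numbers of marks analysed. The p-value uses a normal approximation, which is reasonable only because the degrees of freedom are large.

```python
import math
from statistics import NormalDist


def welch_t(mean1: float, sd1: float, n1: int,
            mean2: float, sd2: float, n2: int) -> tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    v1, v2 = sd1 ** 2 / n1, sd2 ** 2 / n2  # squared standard errors
    t = (mean2 - mean1) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Means and standard deviations from Table 4; n assumed from Table 2.
t, df = welch_t(69.7, 8.6, 148, 77.0, 6.9, 169)

# Two-tailed p-value via a normal approximation (an assumption made to
# keep the sketch dependency-free; a t distribution would be exact).
p_approx = 2 * (1 - NormalDist().cdf(abs(t)))

print(f"t = {t:.2f}, df = {df:.0f}, p < 0.001: {p_approx < 0.001}")
```

With these inputs the statistic is a little over 8 on roughly 280 degrees of freedom, which is consistent with the reported p<0.001.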

Insights

FeedbackFruits was easy for students to use. It was more straightforward for learning technologists to use than the bespoke solution because of the linkage between FeedbackFruits and Blackboard to transfer student group information and marks. It required less EdTech time because the tasks were fewer and less complex. It was easy to set up the assessment using the FeedbackFruits interface and to access the results and feedback comments for checking, although moderation options were limited.

For both AY 2021-2022 and AY 2022-2023, almost all students involved in the TBL activity participated in at least one element of the PACG exercise. The marks awarded in FeedbackFruits for giving scores, comments and reviewing scores did not result in more complete participation; in AY 2021-2022 a higher proportion of students left scores and comments for their peers than in AY 2022-2023.

It was not possible to assess whether the change in peer assessment scoring design affected how deeply the students thought about contributions of their peers to the TBL groupwork. A large proportion of students (60%) opted not to differentiate between the scores they gave to different members of their group and 70% of scores given were maximum scores. However, most students who gave comments left different comments for each of their peers (87%).

There was a narrower spread of TBL overall marks in AY 2022-2023 compared with the previous year, resulting in less discrimination between students. Significant differences in the mark distributions could not be attributed solely to the change in peer assessment design, suggesting that the impact of the change was comparable with other changes in the TBL and cohort over the two years.

The Faculty of Natural Sciences EdTech Lab plans to investigate optimal set up of FeedbackFruits to support PACG exercises with different assessment and scoring designs. This will include evaluating use of the FeedbackFruits ‘group contribution factor’, which is based on peer ratings, to weight group marks.

Read in full:

FeedbackFruits Evaluation Report
