Report summary

These insights relate to the pilot use of Safe Exam Browser (SEB), a new tool used for enhanced security during assessments in on-campus ICT cluster rooms. The purpose of this evaluation is twofold; firstly, to capture the student and teacher experience of the SEB tool; and secondly to document what level of support is required by the Ed Tech Lab and ICT Digital Education and End User Computing (EUC) teams to facilitate this option. Data was collected from 6 sessions (2 pre-assessment trials and 4 assessments) across 3 modules in the Department of Life Sciences (DoLS), Faculty of Natural Sciences.

Findings

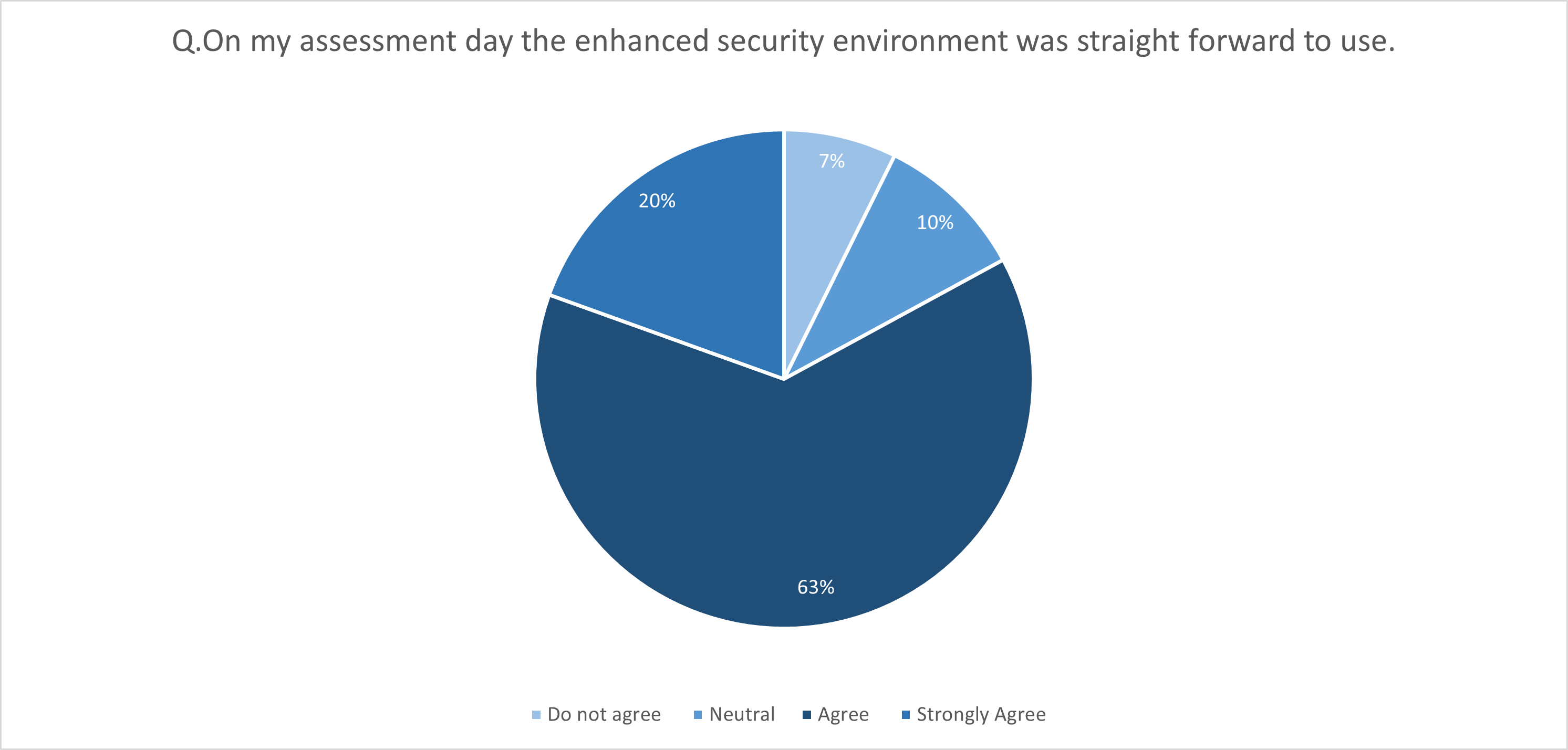

- The student survey achieved a response rate of around 14%, with 41 respondents. The majority of respondents (68%) strongly agreed or agreed with the statement that the SEB tool was straightforward to use on the day of their assessment.

- A comparison was made between student feedback from a group that had support prior to the assessment and a group with none. No statistically significant difference was found between the group that received trials and information beforehand and the group that received no prior support (p = 0.477952, with the significance level set at the standard p < 0.05).

- There were few issues during the assessments (<5 per session), and all could be resolved by the FoNS Ed Tech Lab with the ICT Digital Education and EUC teams. A recommendation for the future is to build contingency into the room booking times and to book more computers than the number required.

- Student respondents who had attended a pre-session or received instructions beforehand reported that they felt prepared on the day of the assessment.

- Any additional use of computational programmes required its own set of arrangements and testing by the academic, e.g. use of R Studio.

- In additional comments, students mentioned difficulties with not being able to use ‘CTRL+F’ to search their notes accessed via TurnItIn, and with accessing R Studio files.

- Teaching staff were overwhelmingly positive about the use of SEB and highlighted the need for having ICT (EUC/Digital Education) personnel readily available in the room as a requirement for future assessment days. They also called for ICT cluster room maintenance in the morning, to be carried out prior to each assessment.

- More than 10 hours of additional support and set-up was needed from the ICT EUC/Digital Education teams and the Ed Tech Lab learning technologists. This is expected to fall as the process of using SEB becomes more streamlined with use.

Recommendations for using SEB for an assessment in future:

- 6 weeks’ notice to Ed Tech Lab for deploying a SEB assessment to cover collating assessment requirements and sharing with ICT; giving the three weeks required by EUC and giving enough time for the learning technologists and Digital Education to build the required resources. (Logistics around room bookings would need to be considered separately by academic as well.)

- 2 members of Ed Tech Lab in the room during the assessment.

- Support from a member of ICT Digital Education and EUC online via Teams chat during the assessment.

- 1-2 invigilators and the academic present in the room, doubling up if there are more than 100 students.

- Access to the room before and after the scheduled assessment time for contingency.

- Continue to always offer familiarisation sessions to new users.

- Students to continue logging in to all resources with their own Imperial credentials.

Challenges with future deployment

- ICT advise that the current level of resource and in-person/Teams support is available for the pilot phase only.

- EdTech support will be difficult to sustain at this level, especially if the number of staff in the FoNS Ed Tech Lab is reduced due to non-continuation of current fixed-term contracts.

- ICT cluster room maintenance of PCs is paramount. If SEB is being used for high-stakes assessments, the rooms need to be checked beforehand, ideally on the day of the assessment.

_________________________________________________________________________________________________

Introduction

In DoLS, tests completed in Blackboard Learn by students sitting in computer cluster rooms have been used as part of assessment for many years. For some modules this accounts for 50% of the overall coursework mark. Staff and students have been raising concerns about the level of validity and deterrence available in this scenario to prevent cheating, despite the presence of invigilators. Imperial is currently investigating multiple lockdown assessment solutions that can be deployed to cluster PCs, and the locked browser environment provided by SEB was seen as a viable solution for the requirements of DoLS. Therefore, a pilot was agreed across multiple modules in the 2023-24 academic year.

What is Safe Exam Browser?

Safe Exam Browser is a secure web browser environment that enables us to carry out online assessments when installed on a computer; so, when activated, SEB turns an Imperial cluster PC into a “workstation with enhanced security”. It controls access to resources like system functions, other websites and applications and prevents unauthorized resources being used during an assessment. The ICT Digital Education and EUC team must deploy a bespoke configuration matching the requirements of the assessment onto Imperial cluster machines before the assessment takes place; and deploying the SEB application on the cluster machines is done once and then updated on an ongoing basis.

Aims of evaluation

The purpose of this evaluation is twofold: firstly, to capture the student and teacher experience and the impact of introducing the SEB tool; and secondly, to document what level of support is required by the Ed Tech Lab and ICT Digital Education and EUC teams to facilitate this option. The objectives listed in Table 1 define what SEB was evaluated against.

| Objective | Action | Success indicators | Metrics/methods of assessment |
|---|---|---|---|
| 1. To allow students using SEB access to relevant material and software needed for the assessment, excluding anything else (e.g. the internet). | Use a tool designed for assessment exercises (SEB). | | |
| 2. To enable academics to set up assessments with enhanced security without increasing workload. | Use a tool for the assessment exercise and compare it with when no tools were used. | | |
| 3. To assess the impact of introducing “non-assessment related” software in a test situation. | To compare student experience between a group that received trials and information prior to assessment and a group that received no pre-assessment support. | | |

Table 1. Objectives and success indicators for the evaluation of extended security used for assessment in LIFE40006/40008/60048

Design of an assessment using the Safe Exam Browser

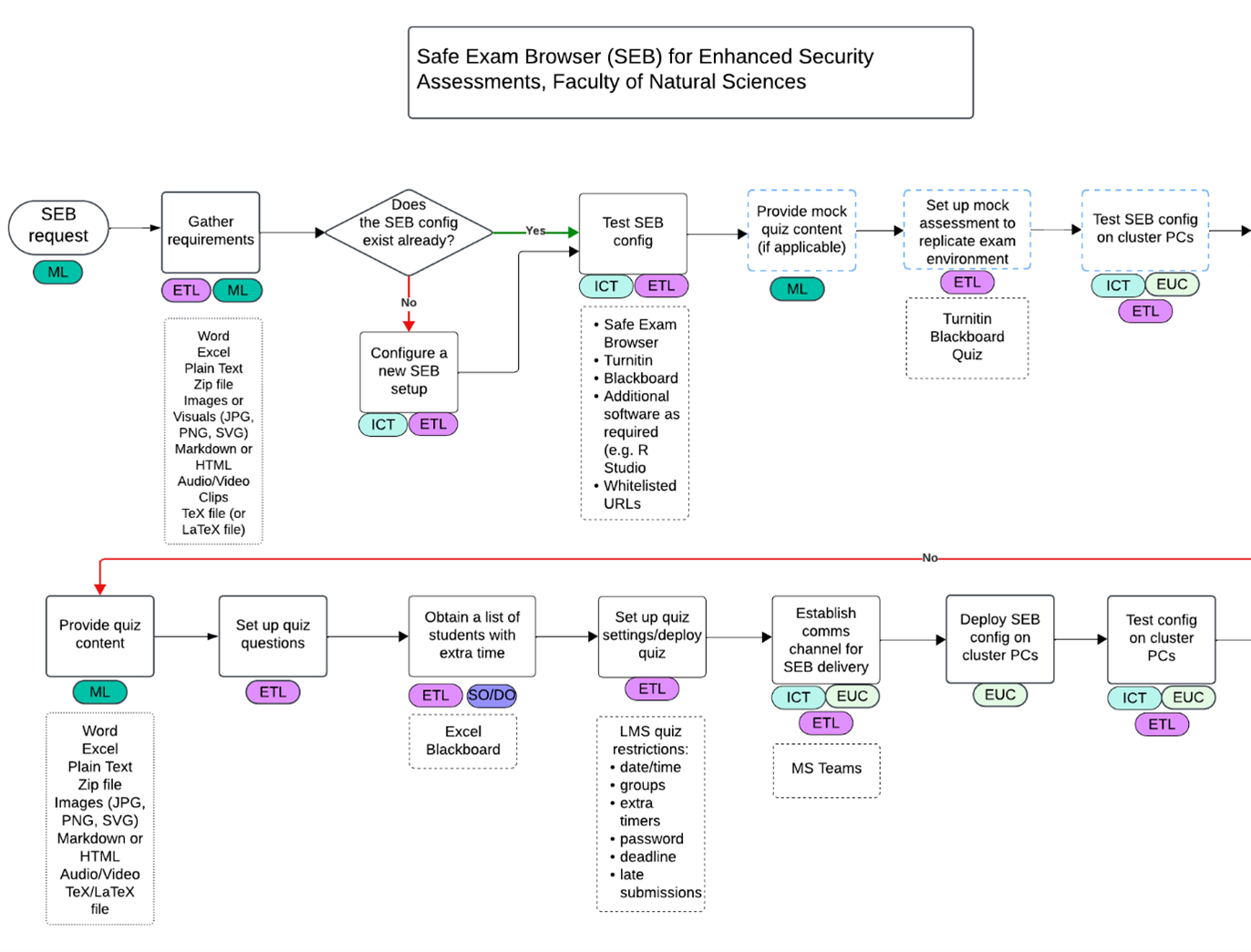

Figure 1. Extract from SEB set up process map, see full version in Appendix C.

As seen from Fig. 1 (an extract from the process map; see SEB Evaluation Report Appendix C for more details), there are several teams and steps involved in setting up a SEB assessment. Broadly speaking, the teams first gather the requirements and build a configuration. The set-up and configuration of the assessment is then tested, followed by the academics checking the config in the room. Finally, steps are taken to ensure students become aware of and familiar with using SEB. As a result, the actual assessment day runs as smoothly as possible, with strong collaboration between ICT, EUC and the Ed Tech Lab, and as many issues as possible are addressed or mitigated in advance.

Table 2 below describes the context of the trials and assessment days, and the participants who took part, between November 2023 and June 2024.

| Student group | Module cohort size | Cluster room | Scenario and technical environment | Trial/information provided | Trial/assessment participants |
|---|---|---|---|---|---|
| LIFE60048 (third year Life Sciences students) | 50 | SAF G27 | Blackboard | No trial session or MFA information; limited scoping with teacher | 50 |
| LIFE40008 (Y1 Biological Sciences students) | 150 | SAF G27 and SECB 310/311 | Blackboard & Turnitin | Cluster room practice submission was available | 139 |
| Life Sciences Y1 (all Biological Sciences and Biochemistry) | 340 | SAF G27 and SECB 310/311 and CHEM 135 | Blackboard, MS Excel, Turnitin/PDF student lecture notes. Session ran twice back-to-back | Cluster rooms practice submission after Multi Factor Authentication information emailed for the half of the cohort who did not take the LIFE40008 assessment (i.e. Biochemists) | 289 |
| Life Sciences Y1 (all Biological Sciences and Biochemistry) | 340 | SAF G27 and SECB 310/311 and CAGB 203 | Blackboard, MS Excel, R Studio, Turnitin/PDF student lecture notes. Session ran twice back-to-back | Majority had attended a trial and already seen MFA information | 288 |

Table 2. Trial venues and participant data summary, November 2023 to June 2024

The actual trials and assessment exercises differed between the groups:

- The LIFE60048 students were asked to access a Blackboard test; but the requirements on the day were changed by the teacher who also wanted them to access course notes (PowerPoint files) during the test.

- The LIFE40008 cohort were asked to access a Blackboard test and to utilise additional files in PDF format that were submitted into TurnItIn in the SEB environment.

- The whole of the Life Sciences first year cohort (which includes the LIFE40008 students) were asked to access a Blackboard test, utilise files in PDFs via the same workflow and also to access Excel for the assessment.

- The same first year cohort then had the same needs but in addition needed to use R Studio and to access relevant files in that programme.

The preparation and familiarisation information provided to students before the assessments also differed between groups:

- The LIFE60048 students were talked through accessing SEB at the start of the assessment as part of the standard introduction the FoNS Ed Tech Lab provides for in-room Blackboard tests.

- The LIFE40008 students all attended a familiarisation session where they were taken through accessing SEB, a Blackboard test and TurnItIn in that environment; and were also encouraged to access a website or tool of their choice which was inaccessible in SEB to prove it could not be “hacked”.

- The Biochemistry half of the Y1 cohort were offered the same familiarisation session as the LIFE40008 students, but with additional information about Imperial multi-factor authentication (MFA) sent to them before that session, as use of this had resulted in several problems for the LIFE40008 cohort.

For the LIFE60048 and LIFE40008 cohorts, the FoNS Ed Tech Lab learning technologists logged in to all the cluster PCs that would be used with a generic assessment username and password provided by Digital Education. This meant that, from the point of sitting down at the PC to logging into SEB with their own username and password, the students could not access any resources or saved files. This created an experience closer to a high-stakes assessment, where students have no access to resources or communication tools once they enter the assessment room. However, the teachers responsible for the two Life Sciences Y1 assessments did not feel this level of security was necessary. They were happy for the students to log in to the PC with their own usernames and passwords, because the access this enabled would end once the SEB session and the assessment began.

Methods

Notes were made after each session by ICT Digital Education and the Ed Tech Lab learning technologists supporting the implementation of SEB. An issue tracker was kept (SEB Evaluation Report Appendix A).

Feedback was also gathered via email from the academics leading the modules.

All students in the modules were invited to submit feedback via a MS Form survey link available either via email or by scanning a QR code in the cluster room. The form included branching depending on the day/cohort and a variation of three questions with a 5-point Likert scale. It was released after the trials and assessments had been completed and was closed after two weeks.

Thematic analysis was carried out on the qualitative data received from staff and students. Excel was used for statistical analysis of the survey data. Feedback responses were checked for a statistically significant difference between the group receiving pre-assessment trial sessions/guidance and the group with no pre-assessment support, at the standard significance level of p < 0.05.

Results and Insights

Objective 1. To allow students using Safe Exam Browser access to only relevant material and software needed for the assessment.

Academics noted that in the first round of assessments (LIFE60048 and LIFE40008) SEB was not working on all the machines in the cluster rooms. Also, it was often the case that several PCs in each room were not working at all (e.g. would not power up) so having a greater number of computers than students proved critical as the rooms were often very close to capacity. This meant checking of the PCs before the assessment was vital; and checking of the rooms at the start of the assessment day was also booked with ICT First Line Support to ensure the highest number of machines possible was available.

No comment was made from teaching staff about any effect resulting from the change from using a generic assessment account to pre-log in the cluster PCs before the students arrived (LIFE60048 and LIFE40008) to the students logging in to the PCs with their own credentials (both Life Sciences Y1 assessments).

Learning technologists supporting the trials and assessments noted a range of deployment issues that then were flagged and addressed by relevant teams, with every subsequent use of SEB:

During Trial sessions:

- LTI tools (Ed discussion, Leganto) had issues when set to open in a new window. TurnItIn (TII) assignment inbox failed to open in a new window.

- SEB deployment was not in place at approximately 13:05 for one session at 14:00.

- Trial session 1: two out of 29 PCs in SECB 310 were not working.

During assessment days:

- Six out of 80 machines in SAF G27 were not working.

- For LIFE60048, students couldn't access PowerPoint files due to SEB restrictions (this requirement was not included in advance but was requested by the academic during the assessment).

- Wrong configuration file deployed (Medicine's config used instead of the correct one).

- SEB wouldn't launch on some cluster PCs (2 in SAF G27, 5 in SECB 310/311).

The student survey (see SEB Evaluation Report Appendix B) achieved a response rate of around 14% with a total of 41 out of 289 potential respondents. The results from the survey aligned with observations in the room on ease of use, so we can be confident that they are representative.

| Cohort size | Trial and assessment participants | Survey respondents |
|---|---|---|
| 340 | 289 | 41 |

Table 3. Overall survey response rate
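The response rate depends on which denominator is used. As a quick check of the figures in Table 3, the short Python sketch below computes the rate against both the trial/assessment participants and the full cohort. The variable and function names are illustrative assumptions, not part of the original analysis (which was carried out in Excel).

```python
# Figures taken from Table 3; names are illustrative only.
cohort_size = 340
participants = 289
respondents = 41


def rate(part: int, whole: int) -> float:
    """Return part/whole expressed as a percentage."""
    return 100 * part / whole


rate_vs_participants = rate(respondents, participants)
rate_vs_cohort = rate(respondents, cohort_size)

print(f"Against participants: {rate_vs_participants:.1f}%")
print(f"Against full cohort:  {rate_vs_cohort:.1f}%")
```

The "around 14%" quoted in this report corresponds to the first figure, i.e. respondents as a share of the 289 participants.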

The majority of respondents (63%) reported that SEB had been straightforward to use on the day of their assessment. No access issues were mentioned in additional comments. The comments that were made related to R Studio and to using CTRL+F within a TurnItIn document.

Figure 2. Students’ responses to the survey about enhanced security using SEB on assessment day.

Objective 2. To allow academics to set up assessments with enhanced security without increasing workload.

As mentioned earlier the academics would prepare and provide the assessment requirements to the Ed Tech Lab as part of any assessment procedure. In addition, they would also be required to support test deployment at some level prior to the assessment day. Overall, we received no comments from academics about SEB that would suggest any increase in workload was felt with these added steps.

The FoNS Ed Tech Lab reiterated the importance of best practice for running assessments in computer rooms:

- It is best if extended time students are accommodated in one room.

- Assessment in progress signs are useful to avoid unnecessary interruption by the wider Imperial community wanting to use the cluster rooms.

- It is essential to have a member of teaching staff in attendance to answer questions on the content of the assessment and to make decisions on scenarios that might lead to mitigating circumstances (e.g. should a student be allocated extra time to complete the test in Blackboard because they had to swap PCs).

- Having the cluster PCs checked before an assessment to ensure the highest number of working machines is very important. ICT have now added a form in ASK to request room checks by the first line support team on assessment days.

They also noted some practical lessons learnt and recommendations for running assessments using SEB:

- Meeting with the academics within agreed timeframe to confirm their assessment requirements and agreeing these could then not be changed is essential, as was testing the SEB config with the academic once it was ready.

- Having a MS Teams chat to support each other across multiple rooms and call on ICT support proved to be a good move as issues were resolved swiftly. It was also beneficial to use this chat to provide updates and resolve questions during the set-up of each session.

- There were fewer student queries to troubleshoot on the assessment day when the students had completed a familiarisation session before the assessment.

- Logging in and out of 80 machines with the assessment account was labour-intensive; and the sessions were more manageable when students logged in with their own credentials. Therefore, assessment accounts should only be used if there is an explicit requirement for that level of security by the teacher/department.

- Ending the sessions is still a lot of work (20 minutes for two people to close an 80 PC room). To ensure security, the unlock password that ends a SEB session is not distributed to the students, so the learning technologists have to terminate every SEB session manually using the password and then log the students out of each cluster PC.

- A process could be explored where ICT uses a remote login method to start and switch off all machines, so that students would not use their own credentials. However, this would create more work for EUC and increase the need for troubleshooting on the day.

- Alternatively, the lock and unlock password could be removed from the SEB session but this would greatly reduce security: students could leave the secure environment to check their own resources on the PC and then log back in with no restriction.

The feedback from Digital Education and EUC was as follows:

- EUC: deploying the SEB application and keeping it updated across Imperial cluster PCs is not a notable piece of work and is no different from many other applications.

- Digital Education: building the config file for an assessment can take up to a week, and longer if the new config requires web or desktop applications that they haven't used before. Therefore testing/sign-off by the academics/staff requesting the assessment must be done in a timely manner so that highlighted issues can be addressed and the desired config deployed.

- For example, some web applications require lots of additional authentication URLs to be whitelisted and that can take some time to parse and needs more thorough testing. This is a considerable piece of set up from the Digital Education Team for one assessment: even with a SEB config that is re-used from a previous year lots of manual editing can be required to ensure it is still viable as URLs to resources can change.

- Once Digital Education have built the config file EUC noted that deploying it to cluster PCs was a much quicker part of the process (half a day) with testing taking the most time; and was faster when fewer changes were required to a file.

- Although EUC were able to amend an incorrect deployment of SEB very quickly in the assessments mentioned earlier, they request three weeks’ notice to deploy a SEB config file for use in an assessment. This allows for testing software deployments/configuration as required, but a slightly more significant factor is the scheduling of staffing resource. (This timeframe is longer than the two weeks the learning technologists would request to set up an assessment where SEB was not used.)

- If multiple different SEB assessments need to run in the same room on the same day this requires a lot of support from EUC to manage the deployment and control the risk of error.

- To help with scheduling, being able to display the dates and rooms being used for assessments (for FoNS and other departments) would be hugely beneficial and would avoid confusion.

- SEB is an open-source piece of software so no outside or vendor support is available if ICT encounter problems with the application.

Objective 3. To assess and compare the impact of introducing SEB in an assessment between a group with trial/information and another with none.

Academics valued the trial and information provided to students beforehand.

“I can tell you straightaway that the training sessions were necessary and well done. Next year we will schedule them in from the start so that they run smoothly in terms of getting the students in and out. The main stumbling block is the working (or non-working) status of the machines.”

The EdTech staff also saw a reduced level of issues and queries on assessment days from students who had attended a trial, especially in the support required for multi-factor authentication queries.

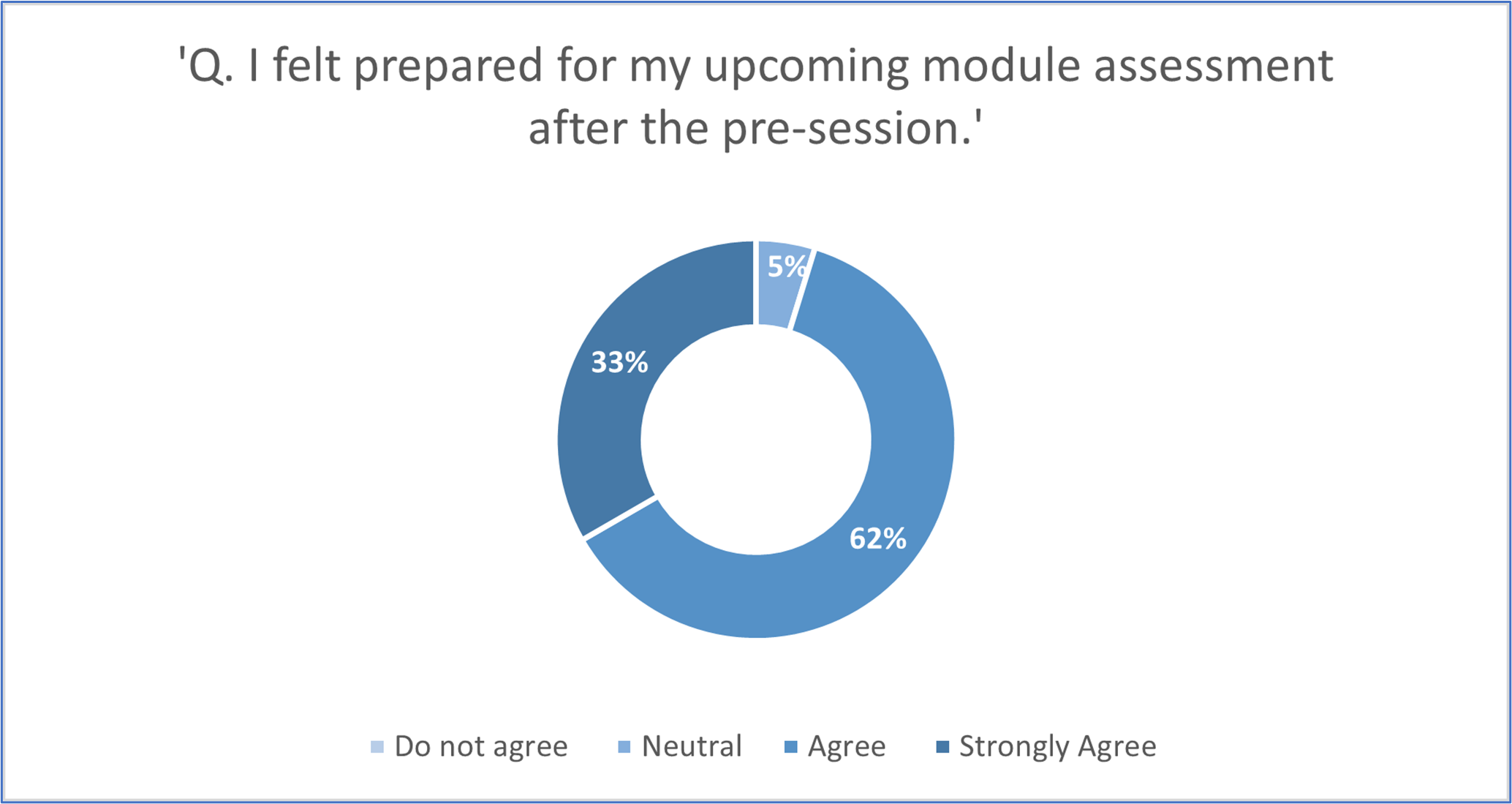

Figure 3. Students’ responses to the survey about enhanced security using SEB after a pre-session.

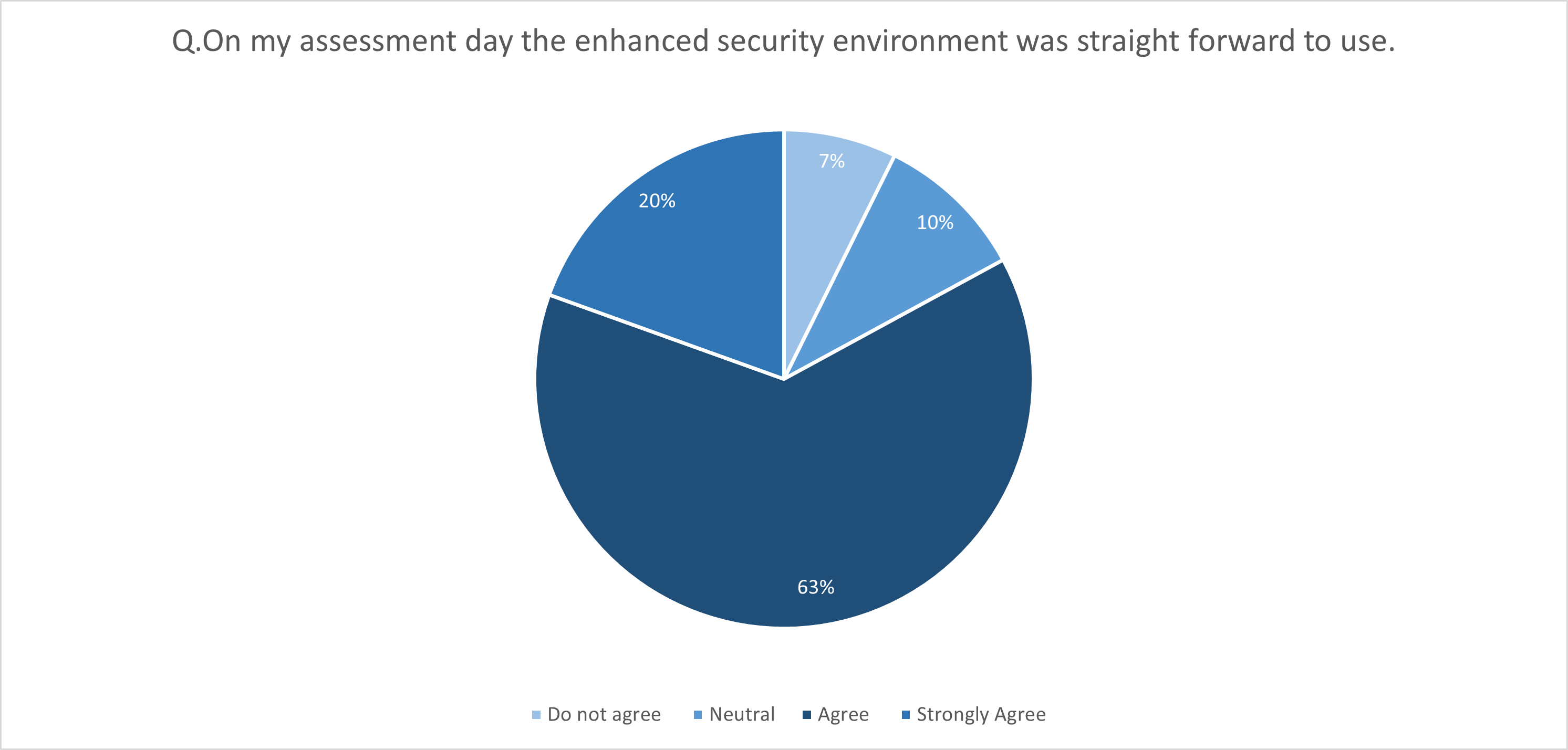

Figure 4. Students’ responses to the survey about enhanced security using SEB after an assessment.

Of the 41 survey student respondents:

- Of those who attended a familiarisation session (group A), all but one felt prepared for the day of the assessment. Two other respondents gave a neutral response.

- Of the students who did not attend a familiarisation session (group B), all but two felt prepared for the day of the assessment too. Four students responded neutrally.

- Regardless of group, the majority of respondents (63%) reported that SEB had been straightforward to use on the day of their assessment.

- The only respondents (see Fig. 4) who disagreed with the statement on ease of use and struggled with SEB on assessment day had not attended any pre-sessions. One student who had attended the pre-session trial and received information also disagreed with ease of use, but referred to R Studio and not SEB in their comments when elaborating on issues.

| Observed values | Don't Agree | Strongly Agree/Agree | Neutral | Respondents |
|---|---|---|---|---|
| Group A | 1 | 18 | 2 | 21 |
| Group B | 2 | 14 | 4 | 20 |
| Totals | 3 | 32 | 6 | 41 |

Table 4. A/B Group survey responses comparison

Our objective and comparison between groups was defined through the following hypothesis for the statistical analysis:

Hypothesis (a): Students who attend a pre-assessment trial and read information will find the Safe Exam Browser easy to use on the day of the assessment.

The significance level was set at the standard p < 0.05. A chi-square test showed no significant association between receiving additional support by attending a pre-session and ease of use of Safe Exam Browser, χ²(2, N = 41) = 1.48, p = .478. One probable reason is that our sample may be too small to draw any conclusions. Another interpretation could be that SEB is very intuitive for our students and only a small number need additional support.
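The test can be reproduced from the cell counts in Table 4. The sketch below is a minimal illustration in Python using only the standard library, and assumes the test was run on the 2×3 table of observed counts (two groups by three response categories), which gives two degrees of freedom. The function and variable names are assumptions made here; the original analysis was carried out in Excel.

```python
import math

# Observed counts from Table 4; columns are
# (Don't Agree, Strongly Agree/Agree, Neutral).
observed = [
    [1, 18, 2],  # Group A: attended a familiarisation session
    [2, 14, 4],  # Group B: no familiarisation session
]


def chi_square_independence(table):
    """Return (statistic, degrees of freedom) for a contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, obs in enumerate(row):
            # Expected count if group and response were independent.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (obs - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


chi2, dof = chi_square_independence(observed)

# For exactly 2 degrees of freedom the chi-square upper-tail
# probability has the closed form exp(-x / 2); any other number of
# degrees of freedom would need a statistics library instead.
assert dof == 2
p_value = math.exp(-chi2 / 2)

print(f"chi2 = {chi2:.2f}, dof = {dof}, p = {p_value:.3f}")
```

Several of the expected cell counts in this table are below 5, so the chi-square approximation is rough for a sample of this size, which is consistent with the caution above about drawing conclusions.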

Conclusions

Despite the small sample size for the survey, the student response towards SEB has been positive. It also seems that SEB did not add any considerable workload burden for the academic staff. The Ed Tech Lab and academics found the trials useful both for surfacing issues and for allowing students to familiarise themselves with SEB, which led to fewer queries on assessment day. The Ed Tech Lab, Digital Education and EUC teams have a substantial role in supporting SEB assessments, and there is probably a need for wider Imperial discussions around the scalability and sustainability of this solution, particularly as it is open source. To keep resource requirements at a reasonable level, there are several adjustments that can already be made.

Below are some recommendations for running SEB for an assessment going forward:

- 6 weeks’ notice to Ed Tech Lab for deploying a SEB assessment to cover collating assessment requirements and sharing with ICT; giving the three weeks required by EUC and giving enough time for the learning technologists and Digital Education to build the required resources. (Logistics around room bookings would need to be considered by academic as well.)

- Have 2 members of the Ed Tech Lab in the room during the assessment.

- 1-2 invigilators and the academic should also be present in the room, doubling up if there are more than 100 students.

- Additional support online via Teams chat from members of ICT Digital Education and EUC teams.

- Students logging in to all resources with their own Imperial credentials is preferred.

- Contingency planning in the form of extra time in the room booking and more computers than the number required for the assessment.

- Familiarisation sessions for new users and pre-information sent via email to continue.
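The notice periods recommended above can be turned into calendar dates by counting back from the assessment day. The sketch below is one possible way to do this in Python; the function name, dictionary keys and the example date are hypothetical and used for illustration only.

```python
from datetime import date, timedelta

# Lead times taken from the recommendations and EUC feedback in this report.
ED_TECH_LAB_NOTICE = timedelta(weeks=6)
EUC_NOTICE = timedelta(weeks=3)


def planning_deadlines(assessment_day: date) -> dict[str, date]:
    """Count back from the assessment day to the latest notice dates."""
    return {
        "notify_ed_tech_lab_by": assessment_day - ED_TECH_LAB_NOTICE,
        "euc_notice_by": assessment_day - EUC_NOTICE,
    }


# Hypothetical assessment date, for illustration only.
deadlines = planning_deadlines(date(2025, 6, 2))
for name, day in deadlines.items():
    print(name, day.isoformat())
```

Room bookings are not covered by this sketch and would still need to be arranged separately by the academic.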
