Imperial and MIT explore how our future could be shaped by AI

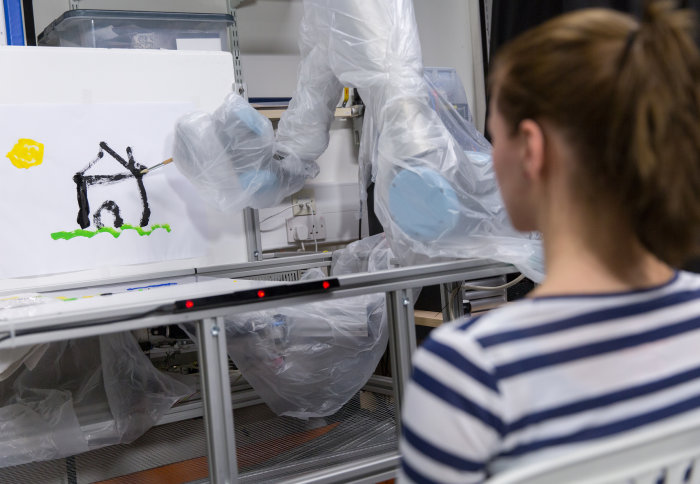

Technology developed by Imperial's Dr Aldo Faisal allows a user to control a robotic arm with eye commands to paint a picture

World-leading experts in artificial intelligence convened in London to discuss the potential for intelligent machines to transform our lives and work.

Systems that Learn, a conference hosted by Imperial Business Partners, the MIT Industrial Liaison Program, and BT Group, brought together world-renowned academics from Imperial and MIT with industry leaders and start-ups to explore how key advances in AI and machine learning could transform human efforts in areas as diverse as disaster recovery, cybersecurity, and healthcare.

Imperial Business Partners and the MIT Industrial Liaison Program seek to strengthen ties between their respective institutions and business leaders, industry and corporate partners. Imperial is deepening its connections with MIT. The two world-leading institutions recently launched a student exchange which will provide research as well as educational opportunities to students at both institutions.

Collaborating with robots

Professor Nick Jennings, Imperial’s Vice Provost (Research and Enterprise) and Chair in Artificial Intelligence, spoke about how humans and machines must work together in an increasingly data-driven world.

Professor Jennings said: “We need to build effective and cooperative partnerships between humans and AI systems, recognising that there is complementary expertise on both sides.”

After the Nepal earthquake, Professor Jennings and his team worked with disaster recovery charity Rescue Global, using teams of humans and machines to determine the best placement of water filters around Kathmandu.

Speaking at the event, Professor Jennings said: “After a major disaster, a huge volume of information comes in from many different channels. This information can often be incomplete or contradictory.”

“AI can be used to analyse this information to identify potential casualties and need. Humans on the ground can then add trusted observations of the situation, and the AI will update its assessment. Together, they jointly monitor the complex operation.”

Cyber arms race

Dr Una-May O’Reilly, Principal Research Scientist at the MIT Computer Science and Artificial Intelligence Laboratory, spoke about her research into the use of machine learning to protect against cyber-attacks.

Dr O’Reilly said that we are locked in what she describes as a “cyber arms race.” As we take security measures to counter network attacks and other malware, the perpetrators develop and exploit unanticipated variations of these attacks that defeat those measures, she explained.

Her research approaches the problem using machine learning techniques, modelling this conflict to predict attacks and allow us to build more secure systems that are adaptive to changing threats.

The human strategy

Professor Alex Pentland, co-creator of the MIT Media Lab and founder of the MIT Connection Science and Human Dynamics Labs, spoke about his work using big data and advanced analytics to better understand human behaviour.

He discussed how we might use the ever-growing amount of data about all aspects of our lives to understand how we could better organise companies, public health, and governance by predicting the way humans will behave.

His team from the Human Dynamics Labs at MIT recently won first place in three categories in the Fragile Families Challenge, which tasked teams with developing statistical and machine learning models that could predict important outcomes in the lives of children, based on a trove of data about children, their parents, their schools, and their overall environments.

Atlas of behaviour

Dr Aldo Faisal, Senior Lecturer in Neurotechnology at Imperial, is seeking to gain a data-driven understanding of human behaviour through machine learning in order to develop diagnostic technologies, predict human intentions from behaviour, and control novel robotic devices.

His team used advanced body sensor networks to measure the eye, full-body and hand movements of more than 40 people living in a studio flat to develop what he calls an “Atlas of Behaviour.” These insights into human movement can be used to develop robots that mimic realistic human motions and activities.

Dr Faisal and his team have developed computer software that enables a user to control a robotic arm with eye commands to paint a simple picture, as well as an algorithm-based decoder system that enables wheelchair users to move around by looking to where they want to travel.

He is currently developing and testing a bodysuit that records movements during everyday life in a number of boys with and without Duchenne muscular dystrophy, to measure how their bodies interact with the world around them. The bodysuit will feed data back in real time to the research team, who will use artificial intelligence to make sense of data patterns and determine whether any new treatment regimes are working. From there, doctors can make better-informed decisions on treatment.

Article text (excluding photos or graphics) © Imperial College London.

Photos and graphics subject to third party copyright used with permission or © Imperial College London.

Reporter

Deborah Evanson

Communications Division