A Real-Time Gamma Probe Tracking System for Cancer Tissue Identification

Our Hamlyn researchers proposed a real-time gamma probe tracking system integrated with augmented reality, aiming to aid surgery for prostate cancer.

Surgery is one of the main treatment options for prostate cancer, the most common cancer in UK males.

Minimally invasive surgery (MIS), including robot-assisted procedures, is increasingly used owing to its significant advantages, such as a reduced risk of infection and less trauma to the patient’s tissues.

However, due to the lack of reliable intra-operative visualisation, complete cancer resection and lymph node identification remain challenging. In other words, making a clear distinction between cancerous and non-cancerous tissue is an arduous task for surgeons. To this day, many surgeons still rely on the naked eye and their sense of touch to detect where the cancer is located in the tissue.

Recently, endoscopic radio-guided cancer resection has been introduced, in which a novel tethered laparoscopic gamma detector is used to determine the location of tracer activity, complementing preoperative nuclear imaging data and endoscopic imaging.

Nevertheless, these probes do not clearly indicate where on the tissue surface the activity originates, which makes it difficult to localise the sensing area and increases the mental workload of the surgeons.

A robust real-time gamma probe tracking system integrated with augmented reality

In light of this, our researchers at the Hamlyn Centre collaborated with Lightpoint Medical Ltd to propose a robust real-time gamma probe tracking system integrated with augmented reality. The proposed system features a new hybrid marker that incorporates both circular dots and chessboard vertices to increase the detection rate.

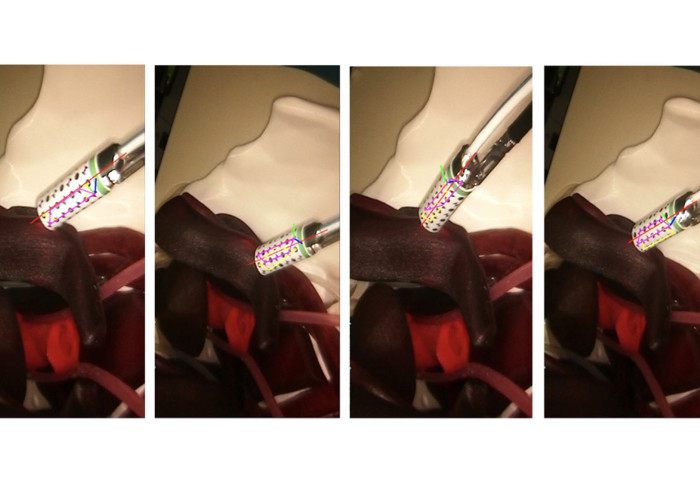

A dual-pattern marker has been attached to the gamma probe, which combines chessboard vertices and circular dots for higher detection accuracy. The additional green stripe was included to introduce asymmetry and resolve direction ambiguity.

The two patterns are detected simultaneously, the circular dots by blob detection and the chessboard vertices by a pixel-intensity-based vertex detector, and the detections are then used to estimate the pose of the probe. In addition, temporal information is incorporated into the framework to reduce tracking failure.
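As a rough illustration of how detected marker points relate to a probe pose, the sketch below projects known 3D marker points into the image with a pinhole camera model and measures the reprojection error against the detected 2D points. A pose estimator typically searches for the rotation and translation that make this error small. The function names, the camera matrix `K` and the marker coordinates are assumptions made for illustration; the article does not describe the solver used by the researchers.

```python
import numpy as np

def project_points(points_3d, R, t, K):
    """Project marker points (N, 3), given in the probe frame, into pixel coordinates.

    R (3x3) and t (3,) are an assumed probe-to-camera pose; K is an assumed
    3x3 pinhole intrinsic matrix.
    """
    cam = np.asarray(points_3d, dtype=float) @ np.asarray(R, dtype=float).T + np.asarray(t, dtype=float)
    uv = cam @ np.asarray(K, dtype=float).T   # apply the intrinsics
    return uv[:, :2] / uv[:, 2:3]             # perspective divide

def reprojection_rmse(points_3d, detected_2d, R, t, K):
    """Root-mean-square pixel distance between projected and detected marker points."""
    residual = project_points(points_3d, R, t, K) - np.asarray(detected_2d, dtype=float)
    return float(np.sqrt(np.mean(np.sum(residual ** 2, axis=1))))

# Hypothetical values, for illustration only.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
marker = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0], [0.0, 5.0, 0.0]])
detected = project_points(marker, np.eye(3), np.array([0.0, 0.0, 100.0]), K)
```

A candidate pose that matches the true one gives an error close to zero, while a pose shifted sideways gives an error of several pixels.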

Moreover, our researchers utilised the 3D point cloud generated from structure from motion to find the intersection between the probe axis and the tissue surface.
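The intersection step can be sketched as a search along the probe axis through the point cloud. The version below is a minimal sketch under stated assumptions, not the researchers' implementation: it keeps cloud points that lie in front of the probe tip and within an assumed `radius` of the axis, takes the nearest depth layer (within an assumed `depth_tol`), and returns the point of that layer closest to the axis. The function name and both thresholds are hypothetical, and their units follow those of the point cloud.

```python
import numpy as np

def axis_surface_intersection(tip, axis, cloud, radius=1.0, depth_tol=1.0):
    """Approximate where the probe axis meets a surface given as a point cloud.

    tip: (3,) probe tip position; axis: (3,) pointing direction;
    cloud: (N, 3) surface points. Returns a (3,) point, or None if the
    axis passes no cloud point within `radius`.
    """
    tip = np.asarray(tip, dtype=float)
    axis = np.asarray(axis, dtype=float)
    axis = axis / np.linalg.norm(axis)
    cloud = np.asarray(cloud, dtype=float)

    rel = cloud - tip
    along = rel @ axis                                   # distance along the axis
    off_axis = np.linalg.norm(rel - np.outer(along, axis), axis=1)

    # Points in front of the tip and close to the axis.
    candidates = np.flatnonzero((along > 0) & (off_axis <= radius))
    if candidates.size == 0:
        return None

    # Keep the first surface layer the axis reaches, then pick the point nearest the axis.
    nearest_depth = along[candidates].min()
    front = candidates[along[candidates] <= nearest_depth + depth_tol]
    return cloud[front[np.argmin(off_axis[front])]]
```

Restricting the choice to the nearest depth layer prevents the search from returning a point on tissue that lies behind the visible surface.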

Presenting this intersection point as an augmented image gives surgeons visual feedback. The proposed method was validated against ground-truth probe pose data generated using the OptiTrack system.
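Comparing an estimated pose with a ground-truth pose usually comes down to a translation error and a rotation error. The sketch below shows one common way to compute both; it is an assumption about how such a comparison could be done, since the article does not state which metrics were reported. The function name `pose_error` is hypothetical.

```python
import numpy as np

def pose_error(R_est, t_est, R_gt, t_gt):
    """Return (translation error, rotation error in degrees) between two poses.

    Rotations are 3x3 matrices and translations are (3,) vectors expressed
    in the same reference frame.
    """
    t_err = float(np.linalg.norm(np.asarray(t_est, dtype=float) - np.asarray(t_gt, dtype=float)))
    # Relative rotation between the estimate and the ground truth.
    R_delta = np.asarray(R_est, dtype=float).T @ np.asarray(R_gt, dtype=float)
    # The angle of a rotation matrix follows from its trace; clip for numerical safety.
    cos_angle = np.clip((np.trace(R_delta) - 1.0) / 2.0, -1.0, 1.0)
    return t_err, float(np.degrees(np.arccos(cos_angle)))
```

The translation error is in the units of the input vectors, and the rotation error is the single angle needed to rotate the estimated orientation onto the ground-truth one.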

In conclusion, the experimental results showed that this dual-pattern marker could provide high detection rates, as well as more accurate pose estimation and a larger workspace than the previously proposed hybrid markers.

Furthermore, our researchers demonstrated the feasibility and potential of using the proposed framework to track the ‘SENSEI®’ probe (a miniaturised cancer detection probe for MIS, developed by Lightpoint Medical Ltd).

In addition to designing the new marker, our research team proposed an augmented reality solution that gives clear visual feedback on where the tracer activity from affected lymph nodes or tumour is located on the tissue surface.

This research was supported by the UK National Institute for Health Research (NIHR) Invention for Innovation Award "A miniature tethered drop-in laparoscopic molecular imaging probe for intraoperative decision support in minimally invasive prostate cancer surgery (NIHR200035)", the Cancer Research UK Imperial Centre and the NIHR Imperial Biomedical Research Centre. (Baoru Huang, Ya-Yen Charlie Tsai, João Cartucho, Kunal Vyas, David Tuch, Stamatia Giannarou and Daniel Elson, "Tracking and visualisation of the sensing area for a tethered laparoscopic gamma probe", International Journal of Computer Assisted Radiology and Surgery, 15:1389–1397, June 2020).

Article text (excluding photos or graphics) © Imperial College London.

Photos and graphics subject to third party copyright used with permission or © Imperial College London.

Reporter

Erh-Ya (Asa) Tsui

Enterprise