VisionBlender: A Tool to Generate Computer Vision Datasets for Robotic Surgery

Hamlyn Centre researchers won the Outstanding Paper Award at a MICCAI workshop for introducing a novel tool that generates accurate endoscopic datasets.

Surgical robots rely on robust and efficient computer vision algorithms to intervene in real time. When these algorithms are developed with clear and accurate datasets, surgeons can move surgical tools precisely relative to the deforming soft tissue.

However, training or testing such algorithms, especially with deep learning techniques, requires large endoscopic datasets. Obtaining these datasets is challenging because it requires expensive hardware, ethical approval, patient consent and access to hospitals.

VisionBlender: A Tool to Efficiently Generate Computer Vision Datasets

To address this, our researchers at the Hamlyn Centre introduced a novel tool, VisionBlender, that can generate large and accurate endoscopic datasets for validating surgical vision algorithms.

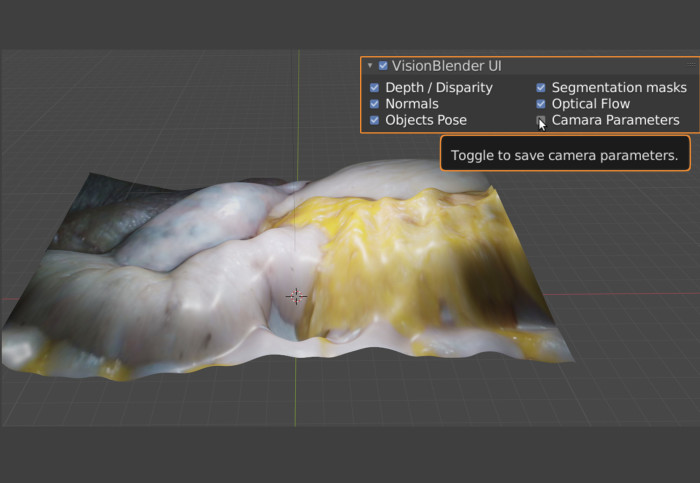

VisionBlender is a synthetic dataset generator built specifically to support robotic surgery. By adding a user interface to Blender, the open-source 3D creation software, the tool lets users generate realistic video sequences together with ground truth maps of depth, disparity, segmentation masks, surface normals, optical flow, object pose and camera parameters.

For this work, our research team won the Outstanding Paper Award given by the joint AE-CAI/CARE/OR2.0 MICCAI workshop on 4th October 2020.

In their presentation at the workshop, held as part of Medical Image Computing and Computer Assisted Intervention 2020 (MICCAI 2020), our researchers not only presented examples of endoscopic data that can be generated with the tool, but also demonstrated one potential application in which the generated data was used to train and evaluate state-of-the-art 3D reconstruction algorithms.

Because it can generate realistic endoscopic datasets efficiently, VisionBlender represents an exciting step forward for robotic surgery.

VisionBlender is an open-source project. More information can be found on VisionBlender's GitHub page.

Article text (excluding photos or graphics) © Imperial College London.

Photos and graphics subject to third party copyright used with permission or © Imperial College London.

Reporter

Erh-Ya (Asa) Tsui

Enterprise