A Novel AI Framework of Image Reconstruction for Minimally Invasive Surgery

Researchers at the Hamlyn Centre have proposed a new unsupervised deep learning framework for image reconstruction, designed to assist minimally invasive surgery.

Minimally Invasive Surgery (MIS) offers several advantages over traditional open surgery including less postoperative pain, fewer wound complications and reduced hospitalisation.

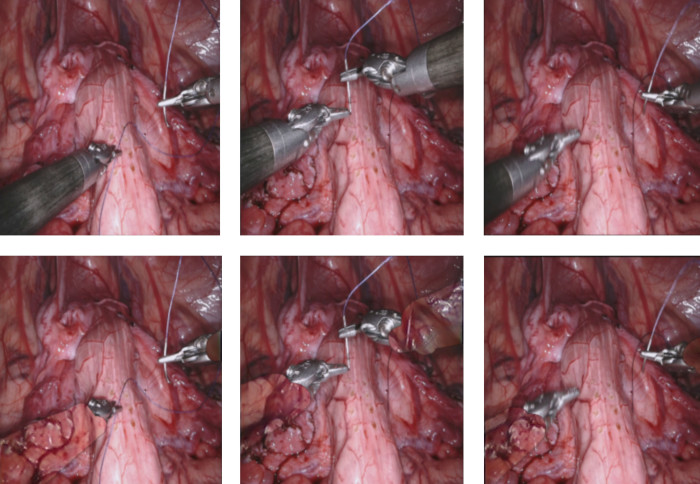

However, MIS also presents several challenges. Among the greatest is the inadequate visualisation of the surgical field through keyhole incisions.

Moreover, occlusions caused by instruments or bleeding can completely obscure anatomical landmarks, reduce surgical vision and lead to iatrogenic injury.

A Novel AI Unsupervised End-to-end Deep Learning Framework for Image Reconstruction

To solve this problem, our research team at the Hamlyn Centre proposed a new unsupervised end-to-end deep learning framework, based on fully convolutional neural networks (FCNs), to reconstruct the view of the surgical scene under occlusions and provide the surgeon with intra-operative see-through vision in these areas.

The team designed a novel generative, densely connected encoder-decoder architecture. It incorporates temporal information by introducing a new type of 3D convolution, the so-called 3D partial convolution, which enhances the learning capabilities of the network and fuses temporal and spatial information.
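To illustrate the idea of a 3D partial convolution, here is a minimal single-channel sketch in NumPy. It assumes the 3D version follows the same rule as the 2D partial convolution used in image inpainting: only unoccluded pixels contribute to each output value, the result is rescaled by the fraction of valid pixels in the window, and the mask is updated so that any window containing at least one valid pixel becomes valid. The function name, argument layout and this exact rule are assumptions made for illustration; the formulation in the published paper may differ.

```python
import numpy as np


def partial_conv3d(video, mask, kernel, bias=0.0):
    """Illustrative single-channel 3D partial convolution (stride 1, no padding).

    video, mask: arrays of shape (T, H, W); mask is 1 for visible pixels
    and 0 for occluded ones. kernel: array of shape (kt, kh, kw).
    Returns the filtered volume and the updated mask.
    """
    kt, kh, kw = kernel.shape
    T, H, W = video.shape
    out_shape = (T - kt + 1, H - kh + 1, W - kw + 1)
    out = np.zeros(out_shape)
    new_mask = np.zeros(out_shape)
    window_size = kernel.size

    for t in range(out_shape[0]):
        for y in range(out_shape[1]):
            for x in range(out_shape[2]):
                m = mask[t:t + kt, y:y + kh, x:x + kw]
                valid = m.sum()
                if valid > 0:
                    v = video[t:t + kt, y:y + kh, x:x + kw]
                    # Convolve over visible pixels only, then rescale to
                    # compensate for the pixels that were masked out.
                    out[t, y, x] = (kernel * v * m).sum() * (window_size / valid) + bias
                    # The window saw at least one visible pixel.
                    new_mask[t, y, x] = 1.0
    return out, new_mask
```

Because each window spans several frames as well as neighbouring pixels, a region hidden in one frame can be filled using the same region in adjacent frames, which is one way to read "fusing temporal and spatial information".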

The dense connections within the network allow the decoder to utilise all the information gathered from each layer to reconstruct and refine the output in a hierarchical manner.

To train the proposed framework, the team proposed a loss function that combines feature matching, reconstruction, style, temporal and adversarial loss terms to generate high-fidelity image reconstructions. Combining multiple losses enables the network to capture the features that are essential for reconstruction.
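A common way to combine several loss terms is a weighted sum, and the sketch below shows that pattern for the five terms named above. The weighted-sum form, the function name and all numeric values are assumptions chosen for illustration; the article does not state how the terms are weighted.

```python
def combined_loss(terms, weights):
    """Return the weighted sum of named loss terms.

    terms and weights are dicts keyed by loss name; both must contain
    exactly the same names.
    """
    mismatched = set(terms) ^ set(weights)
    if mismatched:
        raise ValueError(f"terms and weights disagree on: {sorted(mismatched)}")
    return sum(weights[name] * terms[name] for name in terms)


# Hypothetical per-term loss values and weights, for illustration only.
terms = {
    "feature_matching": 0.5,
    "reconstruction": 0.25,
    "style": 2.0,
    "temporal": 1.0,
    "adversarial": 4.0,
}
weights = {
    "feature_matching": 2.0,
    "reconstruction": 4.0,
    "style": 0.5,
    "temporal": 1.0,
    "adversarial": 0.25,
}
total = combined_loss(terms, weights)
```

In this toy example each weighted term contributes 1.0, so `total` is 5.0. In practice the weights would be tuned so that no single term dominates training.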

The proposed method can reconstruct the underlying view obstructed by irregularly shaped occlusions of varying size, location and orientation. It has been validated on in-vivo MIS video data, as well as on natural scenes, across a range of occlusion-to-image ratios (OIR).

Furthermore, a comparative analysis against state-of-the-art video in-painting models verified the superiority of the proposed method and its potential clinical value.
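As a rough illustration of what an occlusion-to-image ratio measures, the sketch below computes the fraction of pixels in a frame that are covered by an occlusion mask. This definition (occluded pixels divided by total pixels) and the function name are assumptions; the article does not give a formula.

```python
import numpy as np


def occlusion_to_image_ratio(occlusion_mask):
    """Fraction of pixels marked as occluded (True or 1) in a binary mask."""
    occlusion_mask = np.asarray(occlusion_mask, dtype=bool)
    if occlusion_mask.size == 0:
        raise ValueError("occlusion mask must contain at least one pixel")
    return float(occlusion_mask.sum()) / occlusion_mask.size


# A 4x4 frame with a 2x2 occluded block in the centre.
example_mask = np.zeros((4, 4), dtype=bool)
example_mask[1:3, 1:3] = True
example_ratio = occlusion_to_image_ratio(example_mask)
```

Here 4 of the 16 pixels are occluded, so `example_ratio` is 0.25. Evaluating across a range of such ratios indicates how reconstruction quality changes as more of the scene is hidden.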

Our research team is planning to focus next on making the proposed model run in real time for online occlusion removal, and on tackling other challenging occlusions in surgery, such as smoke and blood.

Dr. Giannarou and Mr. Tukra are supported by the Royal Society (UF140290 and RGF\EA\180084) and the NIHR Imperial Biomedical Research Centre (BRC). Mr. Marcus is supported by the NIHR University College London (UCL) Biomedical Research Centre (BRC) and the Wellcome/EPSRC Centre for Interventional and Surgical Sciences (WEISS). This research, 'See-Through Vision with Unsupervised Scene Occlusion Reconstruction', was published in IEEE Transactions on Pattern Analysis and Machine Intelligence on 10 February 2021.

Article text (excluding photos or graphics) © Imperial College London.

Photos and graphics subject to third party copyright used with permission or © Imperial College London.

Reporter

Erh-Ya (Asa) Tsui

Enterprise