A Novel Smart Operating Room Concept: Gaze-Controlled Robotic Scrub Nurse

Researchers at the Hamlyn Centre have proposed a novel smart operating room concept built around a gaze-controlled robotic scrub nurse, designed to support the surgical process.

In laparoscopic surgery, robotic devices have been developed to improve clinical outcomes, consolidating the shift towards minimally invasive surgery (MIS). Recent research reports provide evidence that assistive robotic devices (ARD) improve patient outcomes in surgery.

In surgery, ARD refers to machinery controlled by the surgeon to support the delivery of surgical tasks. In general, ARD afford surgical teams touch-less interaction, better access to information and support with task execution. They can thus be seen as a "third hand" for the surgeon during ad hoc intra-operative tasks.

Compared with conventional laparoscopy, ARD can enable the surgical team to perform a wider range of tasks with more degrees of freedom of motion. ARD can also help improve staff and patient safety, workflow and overall team performance.

Robotic scrub nurses (RSN), meanwhile, support the surgeon by selecting and delivering surgical instruments. Although there have been several advances in RSN based on voice recognition interfaces (VRI) and hand gesture recognition, both approaches have limitations that cannot be neglected, such as the impracticality of disruptive hand gestures when scrubbed in and the failure of voice recognition in noisy operating theatres.

A Novel Smart Operating Room Concept: Gaze-Controlled RSN

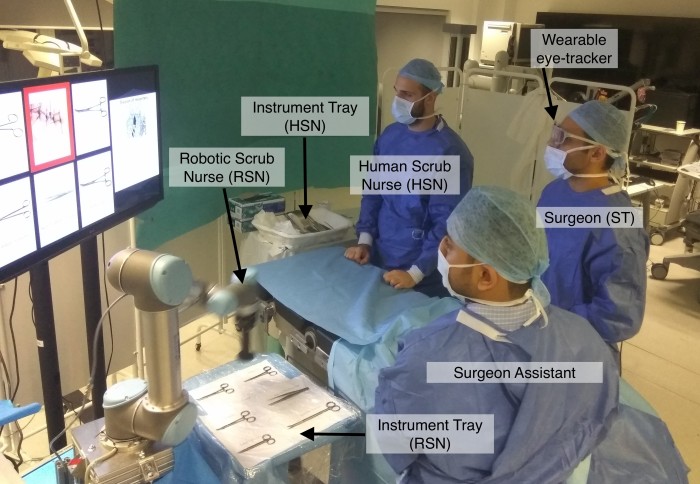

In light of this, our researchers at the Hamlyn Centre proposed a novel, perceptually enabled smart operating room concept based on a gaze-controlled RSN. The concept preserves the unrestricted mobility that surgeons naturally have during an operation.

Not only can gaze be tested as a sensory modality for executing RSN tasks intra-operatively, but integrating eye-tracking glasses (ETG) also offers an advantage over other sensor-based forms of interaction: the surgeon's visual behaviour can be measured in real time.

Standard measures such as blink rate, gaze drift and pupillary dilatation can also be correlated with the surgeon's intra-operative mental workload, concentration and fatigue.

This interface enables dynamic, gaze-based interaction between the surgeon and the RSN, facilitating practical, streamlined human–computer interaction with the aim of improving workflow efficiency and patient and staff safety, and of addressing shortages of surgical assistants.
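Of the standard measures listed above, blink rate is the simplest to illustrate. The sketch below is a minimal, hypothetical example and not the team's pipeline. It assumes the ETG export provides one boolean per sample indicating whether the pupil was detected. Each run of lost detection lasting between 50 ms and 500 ms is counted as a blink, longer losses are treated as tracking dropouts, and the count is normalised to blinks per minute. The function name `blink_rate_per_minute` and both duration thresholds are illustrative assumptions.

```python
from typing import Sequence


def blink_rate_per_minute(
    pupil_detected: Sequence[bool],
    sample_rate_hz: float,
    min_blink_s: float = 0.05,  # assumed lower bound for a blink
    max_blink_s: float = 0.5,   # assumed upper bound; longer gaps = tracking loss
) -> float:
    """Hypothetical blink-rate estimate from a per-sample pupil-detection flag.

    A blink is any run of consecutive 'pupil not detected' samples whose
    duration lies in [min_blink_s, max_blink_s]. The result is blinks per
    minute over the whole recording.
    """
    if sample_rate_hz <= 0:
        raise ValueError("sample_rate_hz must be positive")
    n = len(pupil_detected)
    if n == 0:
        return 0.0

    blinks = 0
    run = 0  # length of the current run of samples with no pupil detected
    # Append a True sentinel so a run at the very end of the recording is closed.
    for detected in list(pupil_detected) + [True]:
        if not detected:
            run += 1
        else:
            if run:
                duration_s = run / sample_rate_hz
                if min_blink_s <= duration_s <= max_blink_s:
                    blinks += 1
            run = 0

    minutes = n / sample_rate_hz / 60.0
    return blinks / minutes
```

For example, a one-minute recording at 100 Hz with three 150 ms gaps and one 2 s gap yields 3.0 blinks per minute, because the 2 s gap falls outside the assumed blink window.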

Validation of the Proposed Concept (Gaze-Controlled RSN) in a Simulated Operating Room

To validate the concept, our research team tested an eye-tracking-based RSN in a simulated operating room, using a novel real-time framework for mobile, theatre-wide 3D gaze localisation.

The procedure was as follows:

- Surgeons performed a segmental resection of pig colon and a hand-sewn end-to-end anastomosis while wearing ETG, in a theatre equipped with distributed RGB-D motion sensors.

- To select an instrument, surgeons fixed their gaze on a screen, triggering the RSN to pick up and hand over the item.
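The article does not specify how a fixation on the screen becomes a command to the RSN. Dwell-time selection is one common technique in gaze interfaces: a target is selected once gaze rests on it for a set time. The sketch below illustrates that technique under stated assumptions and is not the Hamlyn implementation. It assumes gaze samples have already been mapped to screen pixel coordinates, that each instrument occupies a rectangular region on the screen, and that a 1-second dwell threshold is used. `DwellSelector`, the region names and the threshold are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Hypothetical screen region: (x_min, y_min, x_max, y_max) in pixels.
Rect = Tuple[float, float, float, float]


@dataclass
class DwellSelector:
    """Hypothetical dwell-time selector for on-screen instrument regions.

    Fires once when gaze stays inside the same region for at least
    `dwell_s` seconds; the caller would then send that instrument
    request to the robot (not modelled here).
    """
    regions: Dict[str, Rect]
    dwell_s: float = 1.0  # assumed dwell threshold in seconds
    _current: Optional[str] = field(default=None, init=False, repr=False)
    _since: float = field(default=0.0, init=False, repr=False)

    def _hit(self, x: float, y: float) -> Optional[str]:
        """Return the name of the region containing (x, y), if any."""
        for name, (x0, y0, x1, y1) in self.regions.items():
            if x0 <= x <= x1 and y0 <= y <= y1:
                return name
        return None

    def update(self, t: float, x: float, y: float) -> Optional[str]:
        """Feed one gaze sample (time in seconds, screen coordinates).

        Returns an instrument name when a dwell completes, otherwise None.
        """
        target = self._hit(x, y)
        if target != self._current:
            # Gaze moved to a different region (or off all regions): restart timer.
            self._current, self._since = target, t
            return None
        if target is not None and t - self._since >= self.dwell_s:
            # Reset, so a continued fixation must complete a fresh dwell
            # before the same instrument is requested again.
            self._current, self._since = None, t
            return target
        return None
```

Fed 50 Hz samples that rest on one instrument region, the selector fires once after one second; a glance that moves between regions before the threshold fires nothing. The dwell threshold trades selection speed against unintended selections, often called the "Midas touch" problem of gaze interfaces.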

The task performed with a human scrub nurse alone (HSNt) was compared with the task performed with both a robotic and a human scrub nurse (R&HSNt). Task load (NASA-TLX), technology acceptance (Van der Laan's scale), performance metrics and team communication were also measured.

Research Findings

Overall, our researchers found that:

- verbal communication between surgeons and the human scrub nurse was significantly less frequent, a reduction accounted for by task-related communication, in which the surgeon asked for an instrument or gave an operative command. (This may be particularly helpful during the Covid-19 pandemic, when a filtering surgical mask can reduce the clarity of a surgeon's voice.)

- the RSN may limit avoidable staff exposure to infected patients during aerosol-generating procedures, which is particularly important when operating during global pandemics such as the current Covid-19 outbreak.

- social communication behaviours amongst staff did not differ significantly intra-operatively. This type of communication may enhance team personability, thereby improving team dynamics and reducing communication failures.

In future work, our research team aims to extend the eye-tracking RSN to recognise and track instruments in real time, enabling workflow segmentation, task phase recognition and task anticipation.

This research was supported by the NIHR Imperial Biomedical Research Centre (BRC) (Ahmed Ezzat, Alexandros Kogkas, Josephine Holt, Rudrik Thakkar, Ara Darzi and George Mylonas, "An eye-tracking based robotic scrub nurse: proof of concept", Surgical Endoscopy, June 2021).

Article supporters

Article text (excluding photos or graphics) © Imperial College London.

Photos and graphics subject to third party copyright used with permission or © Imperial College London.

Reporter

Erh-Ya (Asa) Tsui

Enterprise