‘Nanomagnetic’ computing can provide low-energy AI, researchers show

Researchers have shown it is possible to perform artificial intelligence using tiny nanomagnets that interact like neurons in the brain.

The new method, developed by a team led by Imperial College London researchers, could slash the energy cost of artificial intelligence (AI), which is currently doubling globally every 3.5 months.

In a paper published today in Nature Nanotechnology, the international team have produced the first proof that networks of nanomagnets can be used to perform AI-like processing. The researchers showed nanomagnets can be used for ‘time-series prediction’ tasks, such as predicting and regulating insulin levels in diabetic patients.

Artificial intelligence that uses ‘neural networks’ aims to replicate the way parts of the brain work, where neurons talk to each other to process and retain information. A lot of the maths used to power neural networks was originally invented by physicists to describe the way magnets interact, but at the time it was too difficult to use magnets directly as researchers didn’t know how to put data in and get information out.

Instead, software run on traditional silicon-based computers was used to simulate the magnet interactions, in turn simulating the brain. Now, the team have been able to use the magnets themselves to process and store data – cutting out the middleman of the software simulation and potentially offering enormous energy savings.

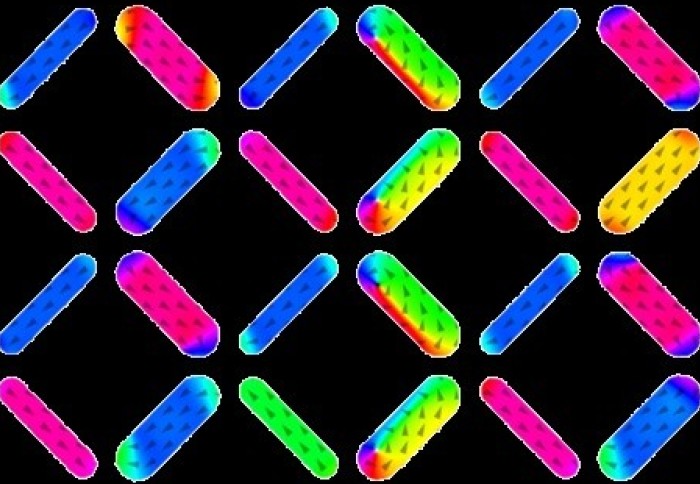

Nanomagnetic states

Nanomagnets can come in various ‘states’, depending on their direction. Applying a magnetic field to a network of nanomagnets changes the state of the magnets based on the properties of the input field, but also on the states of surrounding magnets.

The team, led by Imperial Department of Physics researchers, then designed a technique to count the number of magnets in each state once the field has passed through, giving the ‘answer’.
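To make the idea concrete, the following is a minimal toy sketch of this style of computing, often called reservoir computing. It is not the team's method or code: the ring layout, the switching thresholds, the coupling strength, and every function name here (`run_reservoir`, `fit_readout`, `predict`) are assumptions chosen for illustration. A sequence of field values drives a ring of two-state "magnets", each of which also feels its neighbours; after each field step, the number of magnets in the "up" state is counted in several groups, and a simple linear readout is trained on those counts to predict the next value of a sine wave.

```python
import numpy as np


def run_reservoir(inputs, n_magnets=64, n_groups=8, coupling=0.3, seed=0):
    """Drive a toy ring of two-state 'magnets' with a sequence of field values.

    Returns one feature row per input value: the number of 'up' magnets
    in each of n_groups equally sized groups (n_magnets must divide evenly).
    """
    rng = np.random.default_rng(seed)
    # Hypothetical per-magnet switching thresholds (not from the article).
    thresholds = np.sort(rng.uniform(0.2, 1.0, n_magnets))
    state = -np.ones(n_magnets)  # every magnet starts 'down'
    features = []
    for u in inputs:
        # Local field = applied field + influence of the two ring neighbours.
        neighbours = np.roll(state, 1) + np.roll(state, -1)
        local = u + coupling * neighbours
        # A magnet aligns with its local field only if that field exceeds its
        # threshold; otherwise it keeps its previous state, which acts as memory.
        switches = np.abs(local) > thresholds
        state = np.where(switches, np.sign(local), state)
        # Readout: count the 'up' magnets in each group.
        up = (state > 0).reshape(n_groups, -1)
        features.append(up.sum(axis=1))
    return np.array(features, dtype=float)


def fit_readout(features, targets, ridge=1e-3):
    """Train a linear readout on the state counts with ridge regression."""
    X = np.hstack([features, np.ones((len(features), 1))])  # add a bias column
    A = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ targets)


def predict(features, weights):
    """Apply a trained linear readout to new state counts."""
    X = np.hstack([features, np.ones((len(features), 1))])
    return X @ weights


# Time-series prediction on a sine wave: predict the next value from the counts.
t = np.arange(400)
signal = np.sin(0.2 * t)
feats = run_reservoir(signal[:-1])           # row i is the response to signal[i]
weights = fit_readout(feats[:300], signal[1:301])
pred = predict(feats[300:], weights)         # estimates of signal[301:400]
```

Only the readout weights are trained; the "magnets" themselves are never adjusted, which is the sense in which the physical interactions do the processing. How well such a toy predicts depends heavily on the assumed thresholds and coupling, so no accuracy figure is implied here.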

Co-first author of the study Dr Jack Gartside said: “We’ve been trying to crack the problem of how to input data, ask a question, and get an answer out of magnetic computing for a long time. Now we’ve proven it can be done, it paves the way for getting rid of the computer software that does the energy-intensive simulation.”

Co-first author Kilian Stenning added: “How the magnets interact gives us all the information we need; the laws of physics themselves become the computer.”

Team leader Dr Will Branford said: “It has been a long-term goal to realise computer hardware inspired by the software algorithms of Sherrington and Kirkpatrick. It was not possible using the spins on atoms in conventional magnets, but by scaling up the spins into nanopatterned arrays we have been able to achieve the necessary control and readout.”
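For readers unfamiliar with the reference, the Sherrington-Kirkpatrick model describes a set of two-state spins that all interact with one another through random couplings. In standard textbook notation (the symbols below are not taken from the article or the paper), its energy function is commonly written as:

```latex
H = -\sum_{1 \le i < j \le N} J_{ij}\, s_i s_j,
\qquad s_i \in \{-1, +1\},
\qquad J_{ij} \sim \mathcal{N}\!\left(0, \tfrac{J^{2}}{N}\right)
```

Here $s_i$ is the state of spin $i$, $J_{ij}$ is the random coupling between spins $i$ and $j$, and $N$ is the number of spins. Energy functions of this form also underpin some neural-network models, which is the link between magnetism and AI that the article describes.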

Slashing energy cost

AI is now used in a range of contexts, from voice recognition to self-driving cars. But training AI to do even relatively simple tasks can take huge amounts of energy. For example, training AI to solve a Rubik’s cube took the energy equivalent of two nuclear power stations running for an hour.

Much of the energy used to achieve this in conventional, silicon-chip computers is wasted in inefficient transport of electrons during processing and memory storage. Nanomagnets, however, don’t rely on the physical transport of particles like electrons, but instead process and transfer information in the form of a ‘magnon’ wave, where each magnet affects the state of neighbouring magnets.

This means much less energy is lost, and that the processing and storage of information can be done together, rather than being separate processes as in conventional computers. This innovation could make nanomagnetic computing up to 100,000 times more efficient than conventional computing.

AI at the edge

The team will next teach the system using real-world data, such as ECG signals, and hope to make it into a real computing device. Eventually, magnetic systems could be integrated into conventional computers to improve energy efficiency for intense processing tasks.

Their energy efficiency also means they could feasibly be powered by renewable energy and used to do ‘AI at the edge’ – processing the data where it is being collected, such as weather stations in Antarctica, rather than sending it back to large data centres.

It also means they could be used on wearable devices to process biometric data on the body, such as predicting and regulating insulin levels for diabetic people or detecting abnormal heartbeats.

‘Reconfigurable Training and Reservoir Computing in an Artificial Spin-Vortex Ice via Spin-Wave Fingerprinting’ by Jack C. Gartside, Kilian D. Stenning, Alex Vanstone, Holly H. Holder, Daan M. Arroo, Troy Dion, Francesco Caravelli, Hidekazu Kurebayashi, and Will R. Branford is published in Nature Nanotechnology.

Article text (excluding photos or graphics) © Imperial College London.

Photos and graphics subject to third party copyright used with permission or © Imperial College London.

Reporter

Hayley Dunning

Communications Division