Can you hear the shape of physics?

A new framework and tool have been developed to 'hear' the shapes in noisy physics data.

You can't hear the shape of a drum, but can you perhaps hear the shape of physics? As it turns out, the shapes of the Standard Model, the bedrock of modern physics, are indeed audible. You just need AI headphones, some earth-moving mathematics, and a sufficiently noisy collider.

Colliders, like the Large Hadron Collider at CERN, probe for novel physical phenomena by banging super-fast particles together and observing the various interactions that play out in such collisions. The music of the cosmos can be heard if you know how to listen.

Unfortunately, these experiments often produce humongous datasets, and looking for interesting shapes and patterns in them is no child's play. Given the immense complexity of these interactions, the high-energy physics community has traditionally approached data analysis with custom-built, often ad hoc methods, without a unified theory or recipe for such endeavours.

In a recent work, Akshunna S. Dogra (Imperial), Rikab Gambhir (IAIFI), and other IAIFI researchers have proposed such a unified framework. They prove that optimal transport theory provides the best notation for denoting the music in physics, and that the Earth Mover's distance (also known as the Wasserstein distance) provides the best headphones, thus obtaining a generalised approach to shape observable analysis in physical datasets.
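For readers curious about what the Earth Mover's distance actually measures, here is a minimal sketch in one dimension: it is the least amount of "work" (mass times distance moved) needed to rearrange one pile of weighted points into another. The function name `emd_1d` and the toy inputs are illustrative assumptions; this is not the SHAPER code, and the framework described in the paper works with far richer data than points on a line.

```python
def emd_1d(positions_a, weights_a, positions_b, weights_b):
    """Earth Mover's (Wasserstein-1) distance between two weighted
    point sets on a line. Hypothetical helper for illustration only.

    Both weight lists are normalised to total mass 1, so the result is
    the area between the two cumulative distribution functions.
    """
    total_a = sum(weights_a)
    total_b = sum(weights_b)
    if total_a <= 0 or total_b <= 0:
        raise ValueError("each distribution needs positive total weight")

    # Signed mass at every position: positive for A, negative for B.
    events = [(x, w / total_a) for x, w in zip(positions_a, weights_a)]
    events += [(x, -w / total_b) for x, w in zip(positions_b, weights_b)]
    events.sort()

    distance = 0.0
    cdf_gap = 0.0          # running difference between the two CDFs
    previous_x = events[0][0]
    for x, mass in events:
        # The gap is constant between consecutive positions, so its
        # contribution is |gap| times the width of the interval.
        distance += abs(cdf_gap) * (x - previous_x)
        cdf_gap += mass
        previous_x = x
    return distance


# Moving a single unit of mass from x = 0 to x = 3 costs 3.
single_hop = emd_1d([0.0], [1.0], [3.0], [1.0])

# Merging two half-unit piles at 0 and 1 into one pile at 0.5 costs 0.5.
merge_cost = emd_1d([0.0, 1.0], [0.5, 0.5], [0.5], [1.0])
```

Two identical distributions are at distance zero, and the distance grows smoothly as the shapes drift apart, which is the property that makes it a natural yardstick for comparing a noisy dataset against an idealised shape.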

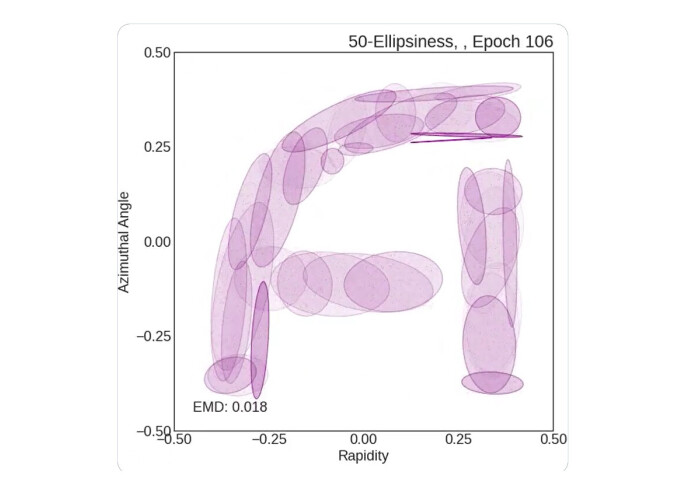

They have also used their framework to build a tool called SHAPER, taking inspiration from the AI field of dictionary learning to build an efficient way of listening.

This exciting work is now available to read in the Journal of High Energy Physics 2023 (195).

But that's not all: you can also hear more than just physics using SHAPER. For example, if you would like to hear the sound of your institution's logo, take a leaf out of IAIFI and FinitePhysicist's book.

Article text (excluding photos or graphics) © Imperial College London.

Photos and graphics subject to third party copyright used with permission or © Imperial College London.

Reporter

Press Office

Communications and Public Affairs

- Email: press.office@imperial.ac.uk