Using deep learning to improve rapid cellular imaging

by Jacklin Kwan

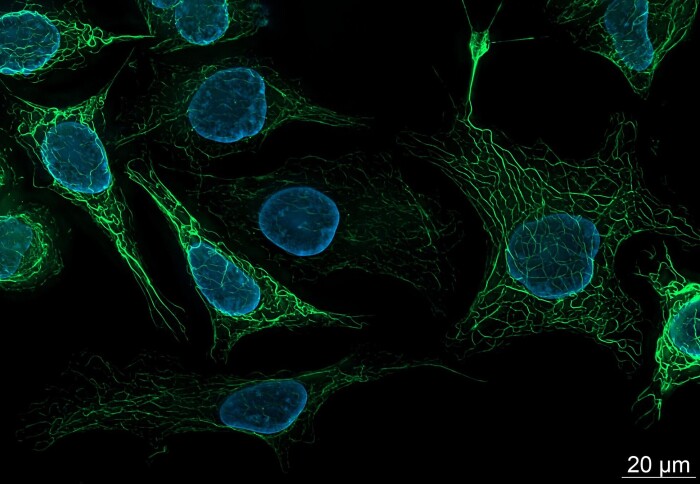

Human-derived cells, stained with green and blue fluorescent dyes. Image attribution: ZEISS Microscopy from Germany.

Researchers have combined neural networks with a wide range of microscopy techniques to improve how scientists peer into cells

Neural networks are transforming the way that scientists capture and study the processes that power life. In a study published in Nature Methods on 18 January, researchers from Imperial College London and University College London (UCL) trained machine learning models to generate the missing intermediate frames of videos that record rapid cellular events.

These events are difficult to capture in their entirety without damaging live cell samples.

With this approach, researchers need to capture only half as many frames as with traditional imaging methods, and can then use neural networks to accurately generate the rest of the image set.

Dr Martin Priessner, the study's lead author from the Department of Chemistry, said: “The advancements in deep neural network technology enable us now to closely monitor delicate cellular processes over longer durations and simultaneously reduce the strain on live samples.”


The two neural network architectures tested in the study successfully reconstructed datasets acquired with four different microscopy techniques.

“We wanted to demonstrate how useful and versatile the approach of these neural networks could be,” said co-lead author, Dr Romain Laine from UCL.

The observer effect

Live-cell imaging allows scientists to study and visualise how life functions at sub-cellular levels. By observing biological processes in real time, researchers can better understand the complex interplay between different cellular organelles.

However, capturing videos of cells is often difficult and invasive. Some microscopy techniques require the use of fluorescent dyes to label cells, but these dyes lose their fluorescence over time as they are exposed to light – a process known as photobleaching.
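Why does capturing fewer frames reduce this damage? A deliberately simplified model makes the link explicit. The minimal Python sketch below assumes, purely for illustration and not as a result from the study, that every exposure bleaches a fixed fraction `p` of the dye that is still fluorescent, so the signal left after `n` exposures is `(1 - p) ** n`. The function name `remaining_fluorescence` and the value `p = 0.01` are hypothetical.

```python
def remaining_fluorescence(n_exposures: int, p: float) -> float:
    """Fraction of the initial signal left after n exposures.

    Toy model (assumption): each exposure bleaches a constant fraction p
    of the dye that is still fluorescent, giving geometric decay.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    return (1.0 - p) ** n_exposures


p = 0.01  # hypothetical fraction of dye bleached per exposure
full = remaining_fluorescence(200, p)  # every frame acquired
half = remaining_fluorescence(100, p)  # every other frame acquired

print(round(full, 3))  # 0.134
print(round(half, 3))  # 0.366
```

Under this toy model, recording half as many frames over the same period leaves almost three times as much signal. Real bleaching kinetics depend on the dye and the illumination, so the numbers are illustrative only.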

Another challenge is phototoxicity, in which light, such as ultraviolet light, can damage and even kill living cells.

“You can crank up your laser power to get a better image quality, but that causes your dye to get bleached or your cell to die quickly,” said Dr Laine.

“There’s always a tug of war between keeping your sample alive and getting an image of good quality,” he said.

Researchers are therefore stuck with a difficult trade-off between capturing a short, high-quality, low-noise video and a longer, noisier one. However, artificial intelligence may offer a way to have both.

The team coined the term ‘content-aware frame interpolation’ (CAFI) to describe machine learning-based techniques aimed at increasing the frame rate of microscopy videos by generating intermediate frames between existing ones.

Frame interpolation was already common in computer graphics and video processing, but it had never been applied to microscopy.

Frame interpolation can make videos smoother. For example, a video can be converted from 30 frames per second (fps) to 60 fps by estimating how different objects move between two consecutive frames and synthesising a new frame in between.
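To make the frame-rate arithmetic concrete, here is a minimal Python sketch of the simplest classical approach, which blends neighbouring frames pixel by pixel and does not estimate motion at all. It is not the CAFI method or either of the networks used in the study. The function names `blend` and `double_frame_rate` are hypothetical, and frames are assumed to be small greyscale images stored as nested lists.

```python
from typing import List

Frame = List[List[float]]  # a greyscale frame as rows of pixel intensities


def blend(a: Frame, b: Frame, t: float = 0.5) -> Frame:
    """Linearly blend two frames pixel by pixel: (1 - t) * a + t * b."""
    return [[(1 - t) * pa + t * pb for pa, pb in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]


def double_frame_rate(frames: List[Frame]) -> List[Frame]:
    """Insert one blended frame between every pair of consecutive frames.

    N input frames become 2N - 1 output frames, so a clip recorded at
    30 fps plays back at roughly 60 fps over the same duration.
    """
    if not frames:
        return []
    out: List[Frame] = []
    for prev, nxt in zip(frames, frames[1:]):
        out.append(prev)
        out.append(blend(prev, nxt))
    out.append(frames[-1])
    return out


# A single bright "particle" moves two pixels to the right between frames.
f0 = [[0.0, 1.0, 0.0, 0.0, 0.0]]
f1 = [[0.0, 0.0, 0.0, 1.0, 0.0]]

video = double_frame_rate([f0, f1])
print(len(video))  # 3
print(video[1])    # [[0.0, 0.5, 0.0, 0.5, 0.0]]
```

The toy example exposes the weakness of plain blending: instead of one particle halfway along its path, the inserted frame contains two half-bright ghosts. Estimating motion is what lets an interpolator place the particle at its in-between position instead.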

Using neural networks to fill in gaps

Deep learning networks can be trained to understand and predict how objects move in a video. The researchers took existing machine learning models pre-trained on high-quality video clips and fine-tuned them on live-cell microscopy videos.


To test their models, the scientists took other movies of cells, removed frames and checked whether the models could successfully reconstruct the missing data.
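The testing procedure described above can be sketched as follows. This is a hedged illustration, not the study's code: it drops every other frame of a movie, rebuilds each missing frame from its two neighbours and scores the reconstruction with peak signal-to-noise ratio (PSNR). The choice of PSNR as the metric, the frame-averaging `linear_blend` stand-in (where a trained network would be plugged in) and all function names are assumptions made for illustration.

```python
import math
from typing import Callable, List

Frame = List[List[float]]  # greyscale frame: rows of intensities in [0, 1]
Interpolator = Callable[[Frame, Frame], Frame]


def linear_blend(a: Frame, b: Frame) -> Frame:
    """Stand-in interpolator: average two frames pixel by pixel."""
    return [[(pa + pb) / 2 for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]


def psnr(ref: Frame, est: Frame, max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB; higher means a closer match."""
    sq_err = [(r - e) ** 2 for rr, er in zip(ref, est) for r, e in zip(rr, er)]
    mse = sum(sq_err) / len(sq_err)
    return math.inf if mse == 0 else 10 * math.log10(max_val ** 2 / mse)


def evaluate_dropped_frames(movie: List[Frame], interpolate: Interpolator) -> float:
    """Hold out every odd-indexed frame, rebuild it from its two neighbours
    and return the mean PSNR against the held-out originals."""
    held_out = range(1, len(movie) - 1, 2)
    if len(held_out) == 0:
        raise ValueError("need at least three frames")
    scores = [psnr(movie[i], interpolate(movie[i - 1], movie[i + 1]))
              for i in held_out]
    return sum(scores) / len(scores)


# Ground-truth movie: a particle moving one pixel per frame.
movie = [
    [[0.0, 1.0, 0.0, 0.0, 0.0]],
    [[0.0, 0.0, 1.0, 0.0, 0.0]],
    [[0.0, 0.0, 0.0, 1.0, 0.0]],
]
print(round(evaluate_dropped_frames(movie, linear_blend), 2))  # 5.23
```

A perfectly reconstructed frame scores infinite PSNR. Here the blended frame, which shows two ghosts instead of one particle, scores only about 5.2 dB, which is how this kind of held-out evaluation separates interpolators that model motion from those that do not.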

“One surprising finding was that the networks just trained on video clips already performed notably better than other classical interpolation techniques and after fine-tuning on the specific use cases the network’s performance improved even further,” said Dr Priessner.

The two models are based on different network architectures, Zooming SlowMo (ZS) and Depth-Aware Video Frame Interpolation (DAIN), each with strengths and weaknesses that depend on the specific use case.

The study shows that the models can successfully improve the temporal resolution of videos taken with four different microscopy techniques, including brightfield microscopy and fluorescence microscopy, which are widely used to study cell and tissue structure.

“Of course, there’s no such thing as a free lunch here,” said Dr Laine, pointing out that the performance of models strongly depends on what they learn from their training datasets. “If our model is applied to new data that is very different from the data we used to train it, then it may produce artefacts,” he said.

-

'Content-aware frame interpolation (CAFI): deep learning-based temporal super-resolution for fast bioimaging' by Martin Priessner et al. is published in Nature Methods.


Article text (excluding photos or graphics) © Imperial College London.

Photos and graphics subject to third party copyright used with permission or © Imperial College London.

Reporter

Jacklin Kwan

Faculty of Natural Sciences