Research Projects

- Optimisation, Control & RL

- ML Applied to Bioprocesses

- Opt of Stochastic Processes

- ML based Optimisation

Optimisation, Control & Reinforcement Learning

In process engineering, many problems combine three difficulties: 1) no precise model of the process is known (plant-model mismatch), which leads to inaccurate predictions and convergence to suboptimal solutions; 2) the process is subject to disturbances; and 3) the system is risk-sensitive, so exploration is inconvenient or dangerous. Chemical reactors and aircraft are simple examples. There are two main approaches to these problems: knowledge-based schemes (where models are derived from physical, biological and chemical information) and data-driven optimization algorithms. On the one hand, knowledge-based methods work very well for problems with disturbances and, under some assumptions, offer convergence guarantees; however, they struggle when plant-model mismatch is present (which is the case with most models). On the other hand, data-driven optimization algorithms can handle plant-model mismatch and process disturbances in practice, but they lack convergence guarantees, are data-hungry, and easily violate constraints due to their exploratory nature. To resolve this dilemma, we develop new efficient algorithms that combine both approaches through hybrid modelling, statistical modelling (e.g. Gaussian processes) and reinforcement learning.

Reinforcement Learning (RL) is a subfield of Artificial Intelligence (AI) which trains Machine Learning models to make optimal decisions. This is done in such a way that the model (or agent; or controller in a process engineering domain) learns how to take optimal actions as it explores the environment in which it resides.
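As a rough illustration of an agent learning by exploration, the sketch below runs tabular Q-learning on a toy problem. Everything in it is an assumption made for illustration and is not taken from the group's work: the five-state chain, the 10% chance that a disturbance flips the chosen action, the reward of 1 at the right-hand end, and all hyperparameters are hypothetical.

```python
import numpy as np


def q_learning(n_states=5, episodes=2000, alpha=0.1, gamma=0.9,
               eps=0.5, slip=0.1, seed=0):
    """Tabular Q-learning on a hypothetical 1-D chain (illustrative only).

    Actions: 0 = move left, 1 = move right. Reaching the last state ends
    the episode with reward 1; every other step gives reward 0.
    """
    rng = np.random.default_rng(seed)
    q = np.zeros((n_states, 2))
    for _ in range(episodes):
        s = 0
        for _ in range(200):  # cap on episode length
            # Epsilon-greedy: explore with probability eps, else act greedily.
            if rng.random() < eps:
                a = int(rng.integers(2))
            else:
                a = int(np.argmax(q[s]))
            # Disturbance: with probability `slip` the action is flipped.
            move = a if rng.random() >= slip else 1 - a
            step = 1 if move == 1 else -1
            s_next = min(max(s + step, 0), n_states - 1)
            done = s_next == n_states - 1
            r = 1.0 if done else 0.0
            # Q-learning update towards the bootstrapped target.
            target = r if done else r + gamma * np.max(q[s_next])
            q[s, a] += alpha * (target - q[s, a])
            s = s_next
            if done:
                break
    return q


q = q_learning()
# Greedy action in each non-terminal state (1 means "move right").
policy = q[:-1].argmax(axis=1)
print(policy)
```

The agent is never told the transition rule; it only sees states and rewards, and the greedy policy is read off the learned table afterwards. In a process setting the table would typically be replaced by a function approximator and the toy chain by the plant or a simulator.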

RL has received a lot of attention; notable examples are “machines” learning to play board games such as Go, or video games such as Dota 2 or StarCraft. However, game-playing is not all that RL is useful for: in previous work we have already optimized chemical processes under this same philosophy.

Reinforcement learning was designed to address the optimisation of stochastic dynamic systems. Chemical processes are, in reality, stochastic (due to process disturbances) and in many cases operated in dynamic mode. The difficulty is that RL, as traditionally applied in mainstream AI, is “data hungry” and does not consider constraints. This is a major drawback, given that process applications generally have much less data available, while unbounded exploration (without constraints) can be dangerous or costly. This project aims to design a new RL algorithm that can be used to optimize complex chemical and biochemical processes that remain unresolved today.

Selected Publications:

- Reinforcement Learning for Batch Bioprocess Optimization, 2020 (preprint)

- Chance Constrained Policy Optimization for Process Control and Optimization, 2020

- Constrained Model-Free Reinforcement Learning for Process Optimization, 2020 (NeurIPS Workshop video)

- Modifier-Adaptation Schemes Employing Gaussian Processes and Trust Regions for Real-Time Optimization, 2019

Machine Learning Applied to Bioprocesses

Bio-production processes (e.g. yeast, algae and cyanobacterial production) provide an opportunity to replace traditional fossil fuels and petroleum-based chemicals. However, compared to chemical plants, bio-production systems are characterised by high production uncertainty due to process noise and complex biochemical reaction kinetics. To accurately estimate optimal operating conditions during an ongoing bioprocess, constructing state-of-the-art optimisation frameworks that consider stochasticity is of critical importance to modern and future industrial practice.

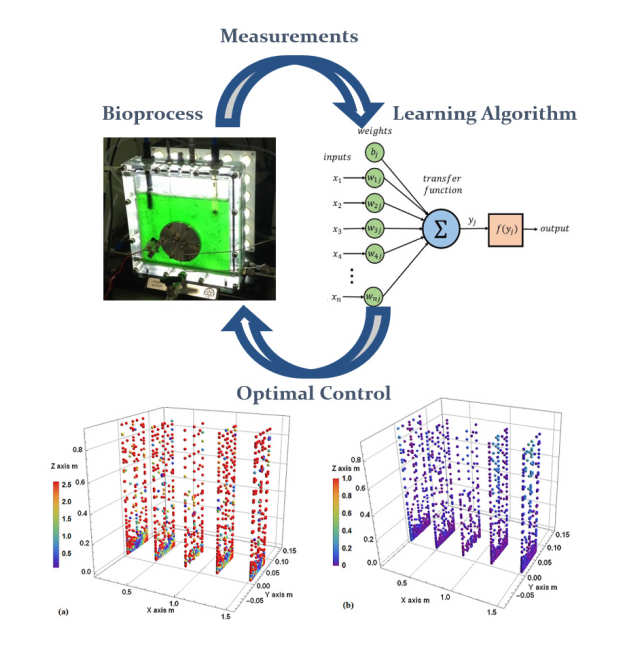

We integrate cutting-edge data-driven (machine learning) and physical modelling approaches to construct auto-corrective modelling frameworks that can accurately predict process behaviours and evaluate optimal control scenarios based on the low-quality, scarce measurements obtained from industrial plants.

This research explores state-of-the-art neural network techniques and other machine learning methods to construct data-driven multi-level modelling frameworks capable of detecting measurement noise, classifying ongoing process kinetics, simulating system dynamics, and computing optimisation strategies for general industrial bioprocesses.

This project is designed to address urgent challenges in today's bio-industry, where existing bioprocess plants have been found to perform poorly in terms of productivity and raw material utilisation.

Selected Publications:

- Comparison of physics-based and data-driven modelling techniques for dynamic optimisation of fed-batch bioprocesses, 2019

- Dynamic modeling and optimization of sustainable algal production with uncertainty using multivariate Gaussian processes, 2018

- Deep Learning based Surrogate Modeling and Optimization for Microalgal Biofuel Production and Photobioreactor Design, 2018

- Review of advanced physical and data‐driven models for dynamic bioprocess simulation: Case study of algae‐bacteria consortium wastewater treatment, 2018

Optimization for Stochastic Processes

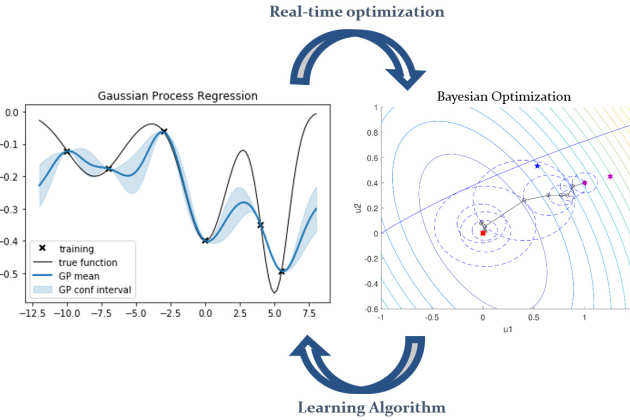

Optimising stochastic dynamic processes is one of the major challenges the process systems community faces today. In the Optimisation and Machine Learning for Process Systems Engineering Group, we use robust optimisation algorithms with dynamic models (knowledge-based, data-driven, or hybrid) to create efficient dynamic optimisation strategies that can be used to optimise nonlinear and nonconvex systems.

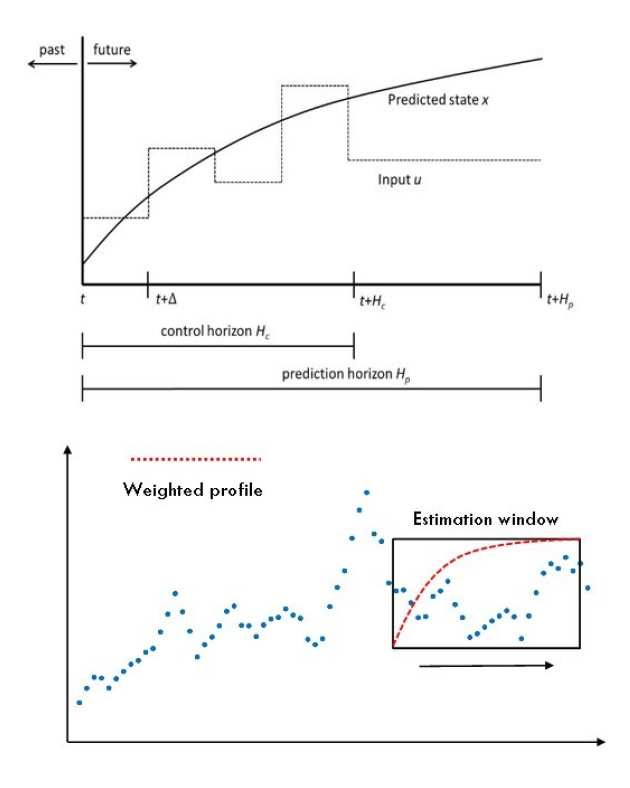

To this end, we combine model predictive control with data-driven modelling and optimisation strategies. Nonlinear model predictive control (NMPC) is one of the few control methods that can handle multivariable nonlinear control systems with constraints. Gaussian processes (GPs), a technique popularised by the machine learning community, are a powerful tool for identifying the required plant model and, because they are probabilistic, for quantifying the residual uncertainty of the plant-model mismatch. It is crucial to account for this uncertainty, since ignoring it may lead to worse control performance and constraint violations. In this way, we develop efficient controllers that are able to address stochastic dynamic processes to a high degree of certainty.
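To make the role of the GP concrete, the following minimal sketch computes a GP posterior mean and standard deviation with a squared-exponential kernel in plain NumPy. It is a sketch under stated assumptions, not the group's implementation: the kernel hyperparameters, the four training points, and the use of `sin` as a stand-in for the unknown plant response are all hypothetical, and the factor 2.0 in the final bound is an arbitrary illustrative choice.

```python
import numpy as np


def rbf(a, b, length=1.0, var=1.0):
    """Squared-exponential kernel between two 1-D arrays of inputs."""
    d = a[:, None] - b[None, :]
    return var * np.exp(-0.5 * (d / length) ** 2)


def gp_posterior(x_train, y_train, x_test, noise=1e-4):
    """Posterior mean and standard deviation of a zero-mean GP."""
    K = rbf(x_train, x_train) + noise * np.eye(len(x_train))
    Ks = rbf(x_train, x_test)
    L = np.linalg.cholesky(K)
    # alpha = K^{-1} y via two triangular-system solves.
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mean = Ks.T @ alpha
    v = np.linalg.solve(L, Ks)
    var = np.diag(rbf(x_test, x_test)) - np.sum(v ** 2, axis=0)
    return mean, np.sqrt(np.maximum(var, 0.0))


# Hypothetical data: four measurements of an unknown plant response.
x_train = np.array([0.0, 1.0, 2.0, 3.0])
y_train = np.sin(x_train)  # stand-in for measured plant outputs

# One query inside the sampled region, one far outside it.
x_test = np.array([1.5, 6.0])
mean, std = gp_posterior(x_train, y_train, x_test)

# A cautious upper bound on the prediction (factor 2.0 is an assumption).
upper = mean + 2.0 * std
print(mean, std, upper)
```

The predictive standard deviation is small between measurements and grows towards the prior value far from them. A controller could use a bound such as `upper` in place of the mean when checking a constraint, so that it backs off where the model is poorly informed by data.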

Selected Publications:

- Modifier Adaptation Meets Bayesian Optimization and Derivative-Free Optimization, 2021

- Reinforcement Learning for Batch Bioprocess Optimization, 2020 (preprint)

- Modifier-Adaptation Schemes Employing Gaussian Processes and Trust Regions for Real-Time Optimization, 2019

Machine Learning Based Optimisation

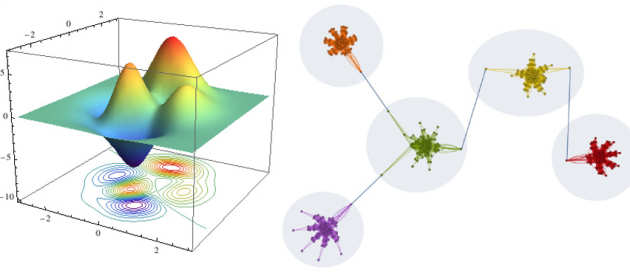

The development of innovative optimisation algorithms with the use of data-driven techniques (machine learning) is an important area of opportunity given the thriving research of the artificial intelligence (AI) community. In this line of research, we develop new algorithms, building on current state-of-the-art artificial intelligence and optimisation techniques. The newly created algorithms are then applied to critical challenges arising in industrial process and operations engineering. The research outcomes will be applied to a variety of problems, including modelling and optimising highly complex and difficult-to-describe systems.

Selected Publications:

- Modifier Adaptation Meets Bayesian Optimization and Derivative-Free Optimization, 2021

- On the Solution of Differential-Algebraic Equations through Gradient Flow Embedding, 2017

- Automated Structure Detection for Distributed Process Optimization, 2016

- ICRS-Filter: A Randomized Direct Search Algorithm for Constrained Nonconvex Optimization Problems, 2016